Why Binary Descriptors?

Following the previous post on descriptors, we’re now familiar with histogram of gradients (HOG) based patch descriptors. SIFT[1], SURF[2] and GLOH[3] have been around since 1999 and been used successfully in various applications, including image alignment, 3D reconstruction and object recognition. On the practicle side, OpenCV includes implementations of SIFT and SURF and Matlab packages are also available (check vlfeat for SIFT and extractFeatures in Matlab computer vision toolbox for SURF).

So, if there no question about SIFT and SURF performance, why not use them in every application?

Remember that SIFT and SURF are based on histograms of gradients. That is, the gradients of each pixel in the patch need to be computed. These computations cost time. Even though SURF speeds up the computation using integral imags, this still isn’t fast enough for some applications. Also, SIFT and SURF are patent-protected (that’s why they’re named “nonfree” in OpenCV ). (UPDATE: SIFT patent expired in March 7th 2020 ref: https://patents.google.com/patent/US6711293B1/en)

This is where binary descriptors come in handy. Imagine one can encode most of the information of a patch as a binary string using only comparison of intensity images. This can be done very fast, as only intensity comparisons need to be made. Also, if we use the hamming distance as a distance measure between two binary strings, then matching between two patch descriptions can be done using a single instruction, as the hamming distance equals the sum of the XOR operation between the two binary strings. This is exactly the common line and rational behind binary descriptors.

In the following series of posts I’ll explain all one needs to know on binary descriptors, covering BRIEF[4], ORB[5], BRISK[6] and FREAK[7], including some examples of OpenCV usage and performance comparison. But first, let’s lay the common grounds by giving a short introduction, explaining the commonalities of the family of binary descriptors.

Introduction to Binary Descriptors

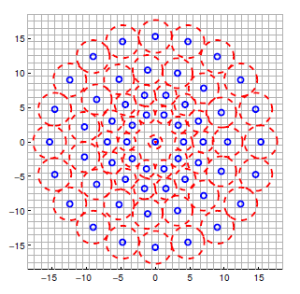

In general, Binary descriptors are composed of three parts: A sampling pattern, orientation compensation and sampling pairs. Consider a small patch centered around a keypoint. We’d like to describe it as a binary string. First thing, take a sampling pattern around the keypoint, for example – points spread on a set of concentric circles. Below you can look at BRISK’s (left) and FREAK’s (right) sampling pattern.

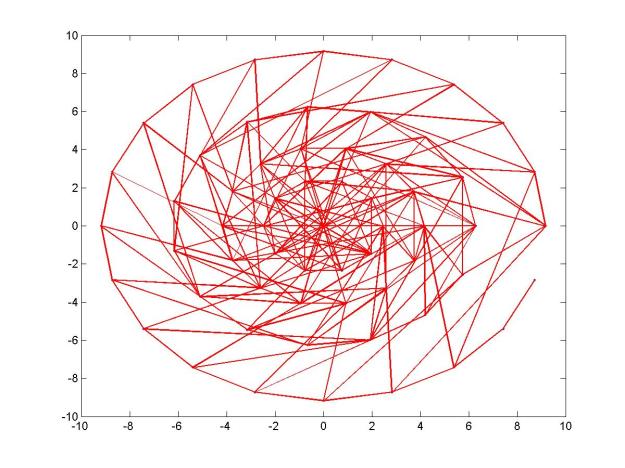

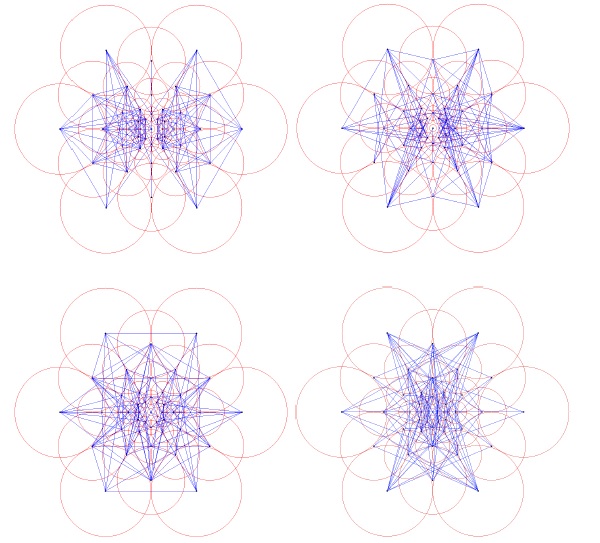

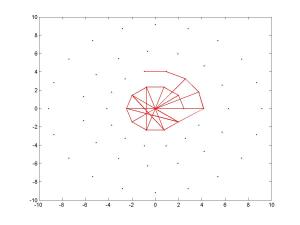

Next, choose, say, 512 pairs of points on this sampling pattern. Now go over all the pairs and compare the intensity value of the first point in the pair with the intensity value of the second point in the pair – If the first value is larger then the second, write ‘1’ in the string, otherwise write ‘0’. That’s it. After going over all 512 pairs, we’ll have a 512 characters string, composed out of ‘1’ and ‘0’ that encoded the local information around the keypoint. In the first figure of the post is BRISK’s pairs and Below you can take a look at FREAKS’s pairs:

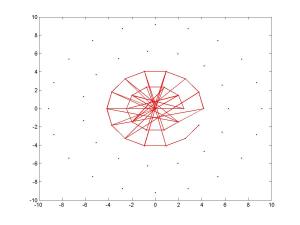

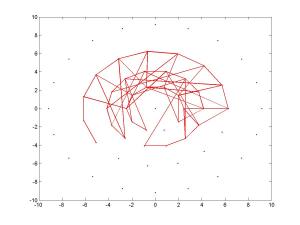

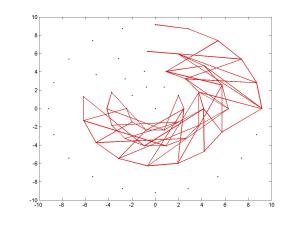

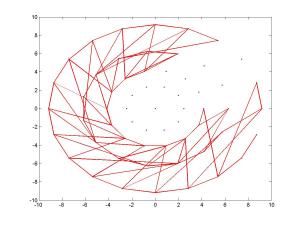

And here are five figures presenting BRISK’s pairs again, where each figure presented only 100 pairs:

What about matching? Given two keypoint, first apply the above procedure on both of them, using of course, the same sampling pattern and the same sequence of pairs. Once will have two binary string, comparing them is easy (and fast) – just count the number of bits where the strings diff, or equivalently apply sum(xor(string1,string2)).

You probably noticed I skipped the second part of orientation compensation; just wanted to explain the basics before we elaborated on the use of orientation. Well, suppose we take a patch and describe it as a binary string using the procedure explained above. Now we rotate the patch by some theta and again extract a binary string describing the rotated patch. Will both description strings be similar? Probably not, as the pattern rotated, but the pairs remained the same.Orientation compensation is a phase where the orientation angle of the patch is measured and the pairs are rotated by that angle to ensure that the description is rotation invariant.

Every binary descriptor has its own sampling pattern, own method of orientation calculation and its own set of sampling pairs. I’ll conclude this post with a table comparing all the binary descriptors in those respects. I know these are all buzzwords , but in the following posts I will talk about every descriptor in detail, and hopefully, everything will become clear.

| Sampling pattern | Orientation calculation | Sampling pairs | |

| BRIEF | None. | None. | Random. |

| ORB | None. | Moments. | Learned pairs. |

| BRISK | Concentric circles with more points on outer rings. | Comparing gradients of long pairs. | Using only short pairs. |

| FREAK | Overlapping Concentric circles with more points on inner rings. | Comparing gradients of preselected 45 pairs. | Learned pairs. |

Hope to see you in the part 2 of this tutorial which will cover the BRIEF descriptor.

Gil.

References:

[1] Lowe, David G. “Object recognition from local scale-invariant features.”Computer vision, 1999. The proceedings of the seventh IEEE international conference on. Vol. 2. Ieee, 1999.

[2] Bay, Herbert, Tinne Tuytelaars, and Luc Van Gool. “Surf: Speeded up robust features.” Computer Vision–ECCV 2006. Springer Berlin Heidelberg, 2006. 404-417.

[3] Mikolajczyk, Krystian, and Cordelia Schmid. “A performance evaluation of local descriptors.” Pattern Analysis and Machine Intelligence, IEEE Transactions on27.10 (2005): 1615-1630.

[4] Calonder, Michael, et al. “Brief: Binary robust independent elementary features.” Computer Vision–ECCV 2010. Springer Berlin Heidelberg, 2010. 778-792.

[5] Rublee, Ethan, et al. “ORB: an efficient alternative to SIFT or SURF.” Computer Vision (ICCV), 2011 IEEE International Conference on. IEEE, 2011.

[6] Leutenegger, Stefan, Margarita Chli, and Roland Y. Siegwart. “BRISK: Binary robust invariant scalable keypoints.” Computer Vision (ICCV), 2011 IEEE International Conference on. IEEE, 2011.

[7] Alahi, Alexandre, Raphael Ortiz, and Pierre Vandergheynst. “Freak: Fast retina keypoint.” Computer Vision and Pattern Recognition (CVPR), 2012 IEEE Conference on. IEEE, 2012.

Pingback: A tutorial on binary descriptors – part 2 – The BRIEF descriptor | Gil's CV blog

Pingback: A tutorial on binary descriptors – part 3 – The ORB descriptor | Gil's CV blog

Pingback: A tutorial on binary descriptors – part 4 – The BRISK descriptor | Gil's CV blog

Pingback: A tutorial on binary descriptors – part 5 – The FREAK descriptor | Gil's CV blog

Thank you, very much. Now I’m understanding how descriptors are constructed.

Please, do the BinBoost binary descriptor!

Maybe after I’ll write the post about evaluation.

http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=6619214&tag=1

And the pdf: http://cvlabwww.epfl.ch/~lepetit/papers/trzcinski_cvpr13.pdf

Thank you so much. This was very Helpful.

Thanks for the great tutorials! Crystal clear explanation!

Could you please provide some OpenCV/Matlab based implementations of the various binary descriptors mentioned here? And please add BinBoost binary descriptor as well. I see its the fastest when it comes to computation time! Would be very helpful! Thanks again 🙂

Hi Shekhar,

Thanks for your kind words.

Regarding implementation, OpenCV offers implementations of BRIEF, BRISK, ORB and FREAK. Implementations of BinBoost and D-BRIEF are available on the authors webside.

I’m currently working on adding BinBoost descriptor to OpenCV. I hope that once I’ll have it complete, I’ll add a post about BinBoost.

Gil

Hey guys,

I’ve been studying the BinBoost paper and I noticed it is not scale and rotation invariant. That’s a big disadvantage, isn’t it! I think it limits the application areas very much. What’s your opinion?

Hi,

I’ve downloaded the BinBoost sources, integrated them into OpenCV and evaluated them on the Oxford benchmark.

BinBoost is scale and rotation invariant. It achieves that by rotating and scaling the patch prior to extracting the descriptor. The thing is, the BinBoost descriptor doesn’t incorporate a mechanism for measuring the orientation of the patch, it just uses the orientation and scale given by the detector (which is SURF according to the sources).

Please let me know if you have any other questions or comments and thanks for your interest in my blog.

Gil

hi Gil,

For multi object tracking, which is extractor, descriptor and matcher i should use?

Thank for article.

Hi Bill,

Thanks for your interest in my blog.

Your question is quite broad. I’m afraid I’m not that familiar with multi-object tracking, but for real time purposes binary descriptors are usually more suitable than the heavy floating point descriptors.

I can refer you to some projects on object tracking that are not related to binary descriptors, if you’d mail me at gil.levi100@gmail.com.

Gil

Great summary, have been reading the papers of those methods for the last few weeks and it sums it pretty well!

That is one hell of an amazing tutorial! It has cleared many of my doubts. Thank you very much!

Thank you for your kind words.

Gil.

Pingback: OpenCV Textured object Tracking Using Filter | Ngoding

Pingback: Adding rotation invariance to the BRIEF descriptor | Gil's CV blog

Hi,it’s very nice from your part to share with us your knowledge , can you please explain more “Orientation Compensation”, because for me if we rotate the patch, pairs (are rotated) but steal the same so strings also don’t change,and we don’t need to rotate them by that angle named theta by you. Thanks and forgive me my English.

Hi,

Say that you want to compare 2 patches from 2 different images where one image has underwent rotation.

Since one patch is a rotated version of the other one, the sampling pairs won’t correspond to the same pixels. To help account for that, we would like to normalize the two patches to the same orientation.

To this end, some descriptors devise a mechanism to measure the patch orientation and to normalize all input patches to some normalized orientation.

Gil.

This is the most concise, upto the point and amazingly easily understandable post about binary descriptors. I wish you had written a book on computer vision !!

Thank you for your kind words.

Hi,

I really appreciate how precisely you explained the image descriptors. I am working on videos for action recognition. Can you please suggest me some video descriptors which are best for action recognition. i am using MATLAB

Hi,

Thank you for your interest in my blog.

I’m not an expert on Action Recognition from videos, but I am familiar with two video descriptors that were used for these purposes:

Motion Interchange Patterns:

http://www.openu.ac.il/home/hassner/projects/MIP/

Space-Time Interest Points:

http://www.di.ens.fr/~laptev/interestpoints.html

Best,

Gil

Gil, that’s simply great and i’m deeply thankful for your efforts you are spending on this blog! Keep it up please and all the best for you!

Thank you for your kind words!

Thank you for this tutorial. It’s really helpful for me.

And I have question: when you detect key points, do you use same method for binary descriptor as for patch descriptor?

Hi,

Thank you for your interest in my blog.

The binary descriptor is used to describe the patch around the keypoint.

Best,

Gil

Hi,

It’s nice. I am studying and playing fast+binary descriptors with flann LSH. You explain very clearly about binary descriptors. Thanks

Thanks!

Pingback: Performance Evaluation of Binary Descriptor – Introducing the LATCH descriptor | Gil's CV blog

Pingback: Age and Gender Classification using Deep Convolutional Neural Networks | Gil's CV blog

Hi Gil,

thanks for the intuitive tutorials. I was wondering if you could clarify something…how and what information is recorded at each ORB descriptor. I understand that it’s a 32 byte binary descriptor but it’s not clear to me what information about a keypoint is recorded. Also, what’s the worst Hamming distance that we could get when comparing the similarities between two ORB binary descriptors?

Thanks again!

Hi,

Thank you for your interest in my blog.

Keep in mind that the 32 bytes are stored as 32 unsigned chars (numbers between 0 and 255) and each unsigned chars is actually a vector of 8 binary bits. This gives us a total of 32*8 = 256 bits.

The worst Hamming distance between two ORB binary descriptors is the largest distance between two 256 bits binary vectors which is 256.

Hope that helps, please let me know if you have any more questions or concerns.

Best,

Gil

Thanks for the clarification and the quick response Gil!

What information about a keypoint is recorded in each descriptor? For instance, does every byte carry information about the intensity of a neighbouring pixel and its coordinates?

Thanks again!

Hi,

The information recorded in the descriptor is only the binary vector produced by the pixels comparisons.

Best,

Gil

Dear Gill

I am working on logo recognition using OpenCV4Android. have you woked with opencv in android devices?

Besides, what type of descriptor/detector you suggest for logo recognition?

Best

Hi,

I have worked with OpenCV in android devices.

Logo recognition in real-world images is very difficult, so I don’t think common local descriptors are strong enough for this task (of course, can’t be sure without experimenting).

I would instead try Convolutional Neural Networks.

Best,

Gil

Deae Gil

I have a code by which I can recognize high contrast images through ORB method. But this method, as I have experimented does not hve the same good effect on logos. On the other hand, I know i can not be expecting a comprehensive recognition method to recognize all possible logos. The point is that this topic is my Bachelor’s project and I wish to do it by open CV4Android. You may take into account that I am just in the beggining of compiter vision so that my undergraduate studies have been in elec. Eng. and I intend to continue in CS. Long story short, through the “Find Object” program I can see the effect of different detectors/descriptors online using Open Cv implementations. And once i find the proper method, i am done. My question is, which detector and descriptor do you recommend to be used for logos?

Hi,

Thank you for your interest in by blog.

I really don’t know which descriptor will be best for recognizing logos. It’s just a matter of experimenting and testing which works best.

Best,

Gil

great

Thanks for the quick reply.

Is each descriptor carrying the binary vector pixel comparisons for just its central pixel or also the neighbouring pixels too? I’m trying to figure out how each of the 256 bits play a role in defining the keypoint. I want to understand how each bit or each of the 32 bytes affect the performance of the detector.

If there are any references or material that you could point me towards, it would be greatly appreciated. Thanks Gil!

Hi,

For more material on binary descriptors, you can read further and take a look at the other posts on binary descriptors in my blog. I also have references there to the original papers.

Best,

Gil

hi Gil,

that’s a very nice explanation.

one thing that is missing: “the accuracy”. matching descriptors is great, but how can I tell the confidence of each match?

Hi Maayan,

The confidence of each match can be deduced by the descriptors distance. More elaborate mechanisms are the ratio test, fitting a transformation between images and removing outliers via ransac or best buddies similarity.

Pingback: amzn/pc-imaginative and prescient-basics-in-microsoft-excel – Tech 'n' Gadget News

1) SIFT patent is finished since March 7th 2020

ref: https://patents.google.com/patent/US6711293B1/en

2) typo: comaprison -> comparison

Thank you, I corrected in the post.

Pingback: Classification of detectors, extractors and matchers - PhotoLens

Pingback: Classification of detectors, extractors and matchers – Row Coding