In the last few posts we mostly talked about binary image descriptors and the previous post in this line of works described our very own LATCH descriptor [1] and presented an evaluation of various binary and floating point image descriptors. In the current post we will shift our attention to the field of Deep Learning and present our work on Age and Gender classification from face image using Deep Convolutional Neural Networks [2].

Example images from the AdienceFaces benchmark

Our method was presented in the following paper:

Gil Levi and Tal Hassner, Age and Gender Classification using Convolutional Neural Networks, IEEE Workshop on Analysis and Modeling of Faces and Gestures (AMFG), at the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), Boston, June 2015.

For code, models and examples, please see our project page.

New! Tensor-Flow implementation of our method .

Acknowledgements

The presented work was developed and co-authored with my thesis supervisor, Prof. Tal Hassner.

Introduction

Though age and gender classification plays a key role in social interactions, performance of automatic facial age and gender classification systems is far from satisfactory. This is in contrast to the super-human performance in the related task of face recognition reported in recent works [3,4].

Previous approaches for age and gender classification were based on measuring differences and relations between facial dimensions [5] or on hand-crafted facial descriptors[6,7,8]. Most have designed classification schemes tailored specifically for age or gender estimation, for example [9] and others. Few of the past methods have considered challenging in-the-wild images [6] and most did not leverage the recent rise in availability and scale of image datasets in order to improve classification performance.

Motivated by the tremendous progress made in face recognition research by the use of deep learning techniques[10] , we propose a similar approach for age and gender classification. To this end, we train deep convolutional neural networks[11] with a rather simple architecture due to the limited amount of training data available for those tasks.

We test our method on the challenging recently proposed AdienceFaces benchmark[6] and show it to outperform previous methods by a substantial margin. The AdienceFaces benchmarks depicts in-the-wild setting. Example images from this collection are presented in the figure above.

Method

Currently, databases of in-the-wild face images which contain age and gender labels are relatively small in size compared to other popular image classification datasets (for example, the Imagenet dataset[12] and the CASIA WebFace dataset [13]). Overfitting is a common problem when training complex learning models on a limited dataset, therefore we take special care in preventing overfitting in our method. This is done by choosing a relatively “modest” architecture, incorporating two drop-out layers and augmenting the images with random crops and flips in the training phase.

Network Architecture

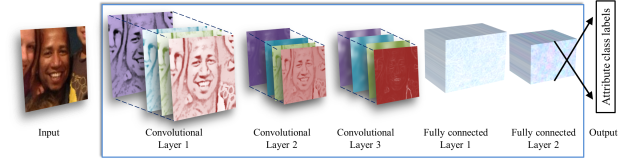

The same network architecture is used for both age and gender classification. The proposed network comprises of only three convolutional layers and two fully-connected layers with a small number of neurons. This architecture is relatively shallow, compared to the much larger architectures applied, for example, in [14] and [15]. A schematic illustration of the network is below:

Illustration of our CNN architecture

The network contains three convolutional layers, each followed by a ReLU operation and a pooling layer. The first two layers also follow an LRN layer [14]. The first Convolutional Layer contains 96 filters of 7×7 pixels, the second Convolutional Layer contains 256 filters of 5×5 pixels, The third and final Convolutional Layer contains 384 filters of 3 × 3 pixels. Finally, two fully-connected layers are added, each containing 512 neurons and each followed by a ReLU operation and a dropout layer.

Experiments

We tested our method on the recently proposed AdienceFaces [6] benchmark for age and gender classification. The AdienceFaces benchmark contains automatically uploaded Flickr images. As the images were automatically uploaded without prior filtering, they depict challenging in-the-wild settings and vary in facial expression, head pose, occlusions, lighting conditions, image quality etc. Moreover, some of the images are of very low quality or contain extreme motion blur. The figure above (first figure in the post) illustrates example images from the AdienceFaces collection. Below is a breakdown of the dataset into the different age and gender classes.

| 0-2 | 4-6 | 8-13 | 15-20 | 25-32 | 38-43 | 48-53 | 60+ | Total | |

| Male | 745 | 928 | 934 | 734 | 2308 | 1294 | 392 | 442 | 8192 |

| Female | 682 | 1234 | 1360 | 919 | 2589 | 1056 | 433 | 427 | 9411 |

| Both | 1427 | 2162 | 2294 | 1653 | 4897 | 2350 | 825 | 869 | 19487 |

Results

We experimented with two methods of classification:

- Center Crop: Feeding the network with the face image cropped to 227 × 227 around the face center.

- Over-sampling: We extract five 227 × 227 pixel crop regions, four from the corners of the 256 × 256 face image and one from the center of the face along with their horizontal flips. All 10 crops are fed to the network and the final classification is the average of the predictions of the 10 crops.

The tables below summarizes our results compared to previously proposed methods. We measure mean accuracy + standard variation, 1-off in age classification means the age prediction was either correct or 1-off from the correct age class:

Gender:

| Method | Accuracy |

| Best from [6] | 77.8 ± 1.3 |

| Best from [16] | 79.3 ± 0.0 |

| Proposed using single crop | 85.9 ± 1.4 |

| Proposed using over-sampling | 86.8 ± 1.4 |

Age:

| Method | Exact | 1-off |

| Best from [6] | 45.1 ± 2.6 | 79.5 ±1.4 |

| Proposed using single crop | 49.5 ± 4.4 | 84.6 ± 1.7 |

| Proposed using over-sampling | 50.7 ± 5.1 | 84.7 ± 2.2 |

Evidently, the proposed network, though it’s simplicity, outperforms previous methods by a substantial margin. We further present misclassification results for our method, both for age and gender classification.

Gender misclassifications: Top row: Female subjects mistakenly classified as males. Bottom row: Male subjects mistakenly classified as females:

Gender misclassifications

Age misclassifications: Top row: Older subjects mistakenly classified as younger. Bottom row: Younger subjects mistakenly classified as older.

Age misclassifications

As can be seen from the misclassification examples, most mistakes are due to blur, low image resolution or occlusions. Furthermore, in gender, most of the misclassifications are in babies or in young children where facial gender attributes are not clearly visible.

Microsoft how-old.net tool

A few months ago, there was a bit hype about Microsoft’s new how-old.net webpage that allow users to upload their images and then it tries to automatically determined their age and gender.

We thought it would be interesting to try and compare MS’s methods with ours and measure their accuracy. To this end, we automatically uploaded all of the AdienceFaces images to the how-old.net page and listed the results. We only got their age estimation result and only in case where MS’s page managed to detect a face in the image (if it the image was too hard for face detection, it would probably fail completely on the much more challenging task of age classification).

MS’s how-old.net site reached an accuracy of about 40%. As listed in the tables above, our network reached 50.7% with over-sampling and 49.5% using single-crop. Below are some examples of images which the MS tool misclassified, but our method classified correctly.

Conclusion

We have presented a novel method for age and gender classification in the wild based on deep convolutional neural networks. Taking into account the relatively small amount of training data, we devised a relatively shallow network and took special care to avoid over-fitting (using data augmentation and dropout layers).

We measured our performance on the AdienceFaces benchmark[6] and showed that the proposed approach outperforms previous methods by a large margin. Moreover, we compared our method against Microsoft’s how-old.net webpage.

For paper, code and more details, please see our project page.

References

[1] Gil Levi and Tal Hassner, LATCH: Learned Arrangements of Three Patch Codes, IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, March, 2016

[2] Gil Levi and Tal Hassner, Age and Gender Classification using Convolutional Neural Networks, IEEE Workshop on Analysis and Modeling of Faces and Gestures (AMFG), at the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), Boston, June 2015.

[3] Sun, Yi, Xiaogang Wang, and Xiaoou Tang. “Deep learning face representation from predicting 10,000 classes.” Computer Vision and Pattern Recognition (CVPR), 2014 IEEE Conference on. IEEE, 2014.

[4] Schroff, Florian, Dmitry Kalenichenko, and James Philbin. “Facenet: A unified embedding for face recognition and clustering.” arXiv preprint arXiv:1503.03832 (2015).

[5] Kwon, Young Ho, and Niels Da Vitoria Lobo. “Age classification from facial images.” Computer Vision and Pattern Recognition, 1994. Proceedings CVPR’94., 1994 IEEE Computer Society Conference on. IEEE, 1994.

[6] Eidinger, Eran, Roee Enbar, and Tal Hassner. “Age and gender estimation of unfiltered faces.” Information Forensics and Security, IEEE Transactions on 9.12 (2014): 2170-2179.

[7] Gao, Feng, and Haizhou Ai. “Face age classification on consumer images with gabor feature and fuzzy lda method.” Advances in biometrics. Springer Berlin Heidelberg, 2009. 132-141.

[8] Liu, Chengjun, and Harry Wechsler. “Gabor feature based classification using the enhanced fisher linear discriminant model for face recognition.” Image processing, IEEE Transactions on 11.4 (2002): 467-476.

[9] Chao, Wei-Lun, Jun-Zuo Liu, and Jian-Jiun Ding. “Facial age estimation based on label-sensitive learning and age-oriented regression.” Pattern Recognition 46.3 (2013): 628-641.

[10] Taigman, Yaniv, et al. “Deepface: Closing the gap to human-level performance in face verification.” Computer Vision and Pattern Recognition (CVPR), 2014 IEEE Conference on. IEEE, 2014.

[11] LeCun, Yann, et al. “Backpropagation applied to handwritten zip code recognition.” Neural computation 1.4 (1989): 541-551.

[12] Russakovsky, Olga, et al. “Imagenet large scale visual recognition challenge.” International Journal of Computer Vision (2014): 1-42.

[13] Yi, Dong, et al. “Learning face representation from scratch.” arXiv preprint arXiv:1411.7923 (2014).

[14] Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. “Imagenet classification with deep convolutional neural networks.” Advances in neural information processing systems. 2012.

[15] Chatfield, Ken, et al. “Return of the devil in the details: Delving deep into convolutional nets.” arXiv preprint arXiv:1405.3531 (2014).

[16] Hassner, Tal, et al. “Effective face frontalization in unconstrained images.” arXiv preprint arXiv:1411.7964 (2014).

Hey i am working on my Final year project and trying to make a application which can tell the Gender,Age,Mood by Face. I trained Haar Cascade for Gender Classification and giving me 69% result on it by image.Now I want to implement on Neural Network(Deep Learning) using Caffe find Gender,Age,Mood can you guide me how i can train my Face Data or about implementation or so this is the best approach for implementing this problem.

Hi,

Thank you for your interest in my blog.

Answering what is the “best” approach would be difficult and it depends on the size of the dataset, it’s variability and the amount of effort you are willing to spend.

Having said that, you might want to take a look at the repository I created which contains the scripts used for training our age and gender models:

https://github.com/GilLevi/AgeGenderDeepLearning

I hope it can help you get started (probably after reading some Caffe tutorial).

Best,

Gil

Hi, I am trying to use your trained model on face images that I collected. How to modify your python code in order to process images with several faces?

Hi Valery,

Thank you for your interest in my project.

You would need to run a face detection algorithm on the images and feed every detected face to the network.

Best,

Gil

Thanks, it is what I am doing. Will be happy to report you our results if you are interested.

Sure.

Any suggestion on making age/gender classification run with opencv’s new dnn module (contrib) / caffe wrapper ?

Hi,

Thank you for your interest in our work.

I can’t think of any particular tips, should be similar to the GoogleNet example:

https://github.com/Itseez/opencv_contrib/blob/master/modules/dnn/samples/caffe_googlenet.cpp

Gil

Any suggestion on making age/gender classification run with opencv’s new dnn module (contrib) / caffe wrapper ?

Hi,

Thank you for your interest in our work.

I can’t think of any particular tips, should be similar to the GoogleNet example:

https://github.com/Itseez/opencv_contrib/blob/master/modules/dnn/samples/caffe_googlenet.cpp

Gil

I was trying to train your model using more data. But I am not able to extract faces from the images. x, y, dx, dy in fold_*_data.txt files doesn’t actually give face bounding box? Can you please help me understand what is x, y, dx and dy in these files

Hi,

You can use the aligned faces provided with the data. No need to crop it and align yourself.

Best,

Gil

Pingback: Emotion Recognition in the Wild via Convolutional Neural Networks and Mapped Binary Patterns | Gil's CV blog

Hi Gil,

I trained the model using a subset of the training data.

I also ran the eval.py in order to evaluate the model on the created run-id.

can you please tell what does precision @1 / precision @2 mean?

And where the gender_test.txt test data is being used.

Thanks in advance,

Jawad

Hi Jawad,

Thank you for your interest in our work.

I’m not sure which code did you use and how eval.py is defined. Can you please mail me the link to gil.levi100@gmail.com?

Best,

Gil

Thank you for sharing. These tips are very useful!

Hi Gil,

Very interesting work! I saw the models available at the Caffe Zoo. Is there a license associated with them? If not would you consider releasing them under Creative Common or MIT license?

Thanks in advance,

Boriana