Since the next few posts will talk about binary descriptors, I thought it would be a good idea to post a short introduction to the subject of patch descriptors. This post will talk about the motivation for patch descriptors and their common usage, and will highlight descriptors based on Histograms of Oriented Gradients (HOG).

I think the best way to start is to consider one application of patch descriptors and to explain the common pipeline for using them. Consider, for example, the application of image alignment: we would like to align two images of the same scene taken from slightly different viewpoints. One way of doing so is to apply the following steps:

- Compute distinctive keypoints in both images (for example, corners).
- Compare the keypoints between the two images to find matches.
- Use the matches to find a general mapping between the images (for example, a homography).
- Apply the mapping to the first image to align it to the second image.
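To make the last step a bit more concrete: when the mapping is a homography, each pixel is mapped in homogeneous coordinates, by multiplying [x, y, 1] with a 3×3 matrix H and dividing by the resulting third coordinate. Here is a minimal sketch in plain Python; the function name and the matrix values are hypothetical. In practice, OpenCV's `cv2.findHomography` can estimate H from the matches and `cv2.warpPerspective` can warp the whole image.

```python
def apply_homography(H, x, y):
    """Map the pixel (x, y) through a 3x3 homography H (nested lists, row-major).

    The point is lifted to homogeneous coordinates [x, y, 1], multiplied by H,
    and divided by the resulting third coordinate w.
    """
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under H")
    return xh / w, yh / w


# A pure translation by (+5, -3), written as a homography (hypothetical values).
H = [[1, 0, 5],
     [0, 1, -3],
     [0, 0, 1]]
print(apply_homography(H, 10, 10))  # (15.0, 7.0)
```

Warping a whole image usually runs the other way: for every output pixel, map back through the inverse of H and interpolate the input image there, so that no output pixel is left empty.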

Let’s focus on the second step. Given a small patch around a keypoint taken from the first image, and a second small patch around a keypoint taken from the second image, how can we determine whether they show the same point?

In general, the problem we are focusing on is that of comparing two image patches and measuring their similarity. The simplest option is a pixel-to-pixel comparison, such as the Euclidean distance between the two patches, but that measure is very sensitive to noise, rotation, translation and illumination changes. In most applications we would like to be robust to such changes. For example, in the image alignment application, we would like to be robust to small viewpoint changes, which means robustness to rotation and to translation.
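To see this sensitivity in numbers, here is a minimal sketch in plain Python that compares a toy 4×4 patch containing a vertical edge with a uniformly brightened copy and with a copy shifted by one pixel. The pixel values and function name are made up for illustration.

```python
import math


def euclidean_distance(a, b):
    """Euclidean (L2) distance between two equally sized patches (lists of rows)."""
    return math.sqrt(sum((pa - pb) ** 2
                         for row_a, row_b in zip(a, b)
                         for pa, pb in zip(row_a, row_b)))


# A toy 4x4 patch with a vertical edge (hypothetical values).
patch = [[10, 10, 200, 200] for _ in range(4)]

# The same patch under a uniform brightness increase of 30 gray levels.
brighter = [[p + 30 for p in row] for row in patch]

# The same patch shifted one pixel to the right (border column replicated).
shifted = [[row[0]] + row[:-1] for row in patch]

print(euclidean_distance(patch, patch))     # 0.0
print(euclidean_distance(patch, brighter))  # 120.0: 16 pixels, each off by 30
print(euclidean_distance(patch, shifted))   # 380.0: the edge moved by one pixel
```

A one-pixel translation produces a larger distance than a clearly visible brightness change, even though both patches obviously show the same structure.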

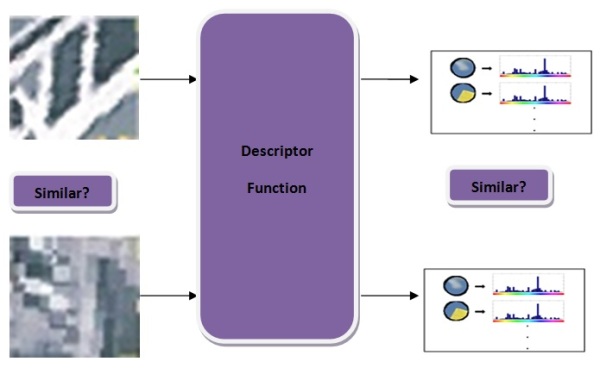

This is where patch descriptors come in handy. A descriptor is a function that is applied to the patch to describe it in a way that is invariant to the image changes relevant to our application (e.g. rotation, illumination, noise etc.). A descriptor comes with a distance function that determines the similarity, or distance, between two computed descriptors. So to compare two image patches, we compute their descriptors and measure the distance between the descriptors. The following diagram illustrates this process:

The common pipeline for using patch descriptors is:

- Detect keypoints in the image (distinctive points such as corners).
- Describe the region around each keypoint as a feature vector, using a descriptor.
- Use the descriptors in the application (to compare two descriptors, use the descriptor's distance or similarity function).
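As a sketch of how the third step often looks when matching keypoints, here is brute-force nearest-neighbor matching in plain Python: each descriptor from the first image is paired with the closest descriptor from the second image under a pluggable distance function. The function names and the toy 2-D descriptors are hypothetical; real descriptors are much longer (128 values for SIFT), and libraries such as OpenCV provide optimized matchers.

```python
import math
from typing import Callable, List, Sequence, Tuple

Descriptor = Sequence[float]


def l2_distance(a: Descriptor, b: Descriptor) -> float:
    """Euclidean distance, a common choice for floating-point descriptors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_descriptors(
    desc1: List[Descriptor],
    desc2: List[Descriptor],
    distance: Callable[[Descriptor, Descriptor], float] = l2_distance,
) -> List[Tuple[int, int, float]]:
    """Brute-force matching: for each descriptor in desc1, return (i, j, d), where j
    indexes its nearest neighbor in desc2 under `distance` and d is that distance."""
    matches = []
    for i, d1 in enumerate(desc1):
        j, best = min(
            ((j, distance(d1, d2)) for j, d2 in enumerate(desc2)),
            key=lambda pair: pair[1],
        )
        matches.append((i, j, best))
    return matches


# Toy 2-D "descriptors" (hypothetical values).
image1 = [[0.0, 0.0], [1.0, 1.0]]
image2 = [[1.1, 0.9], [0.0, 0.2]]
for i, j, d in match_descriptors(image1, image2):
    print(f"keypoint {i} in image 1 -> keypoint {j} in image 2 (distance {d:.3f})")
```

Passing the distance function as a parameter mirrors the idea that every descriptor comes with its own distance: the same matching loop works for a binary descriptor if you pass a Hamming distance instead.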

The following diagram illustrates this process:

HOG descriptors

So, now that we understand how descriptors are used, let’s look at an example of one family of descriptors: descriptors based on Histograms of Oriented Gradients (HOG). Notable members of this family are SIFT [1], SURF [2] and GLOH [3]. We will describe its most famous member, the SIFT descriptor.

SIFT was presented in 1999 by David Lowe and includes both a keypoint detector and a descriptor. The SIFT descriptor is computed as follows:

-

First, detect keypoints using the SIFT detector, which also detects scale and orientation of the keypoint.

-

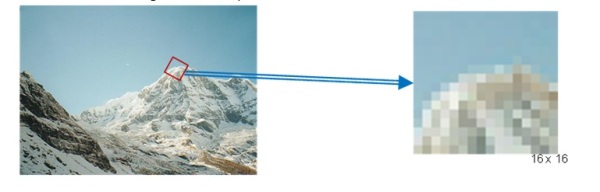

Next, for a given keypoint, warp the region around it to canonical orientation and scale and resize the region to 16X16 pixels.

-

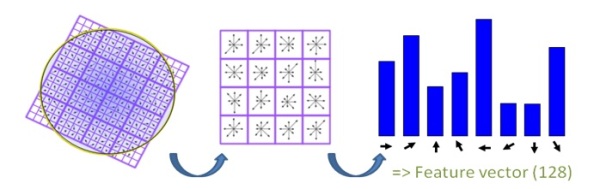

Compute the gradients for each pixels (orientation and magnitude).

-

Divide the pixels into 16, 4X4 pixels squares.

-

For each square, compute gradient direction histogram over 8 directions

-

concatenate the histograms to obtain a 128 (16*8) dimensional feature vector:
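The steps from the gradient computation onward can be sketched in plain Python. This is a simplified, hypothetical implementation, not Lowe's code: it assumes the 16×16 patch has already been warped to its canonical orientation and scale, uses central differences for the gradients, and omits parts of the full method such as Gaussian weighting, interpolation between bins, and normalization of the final vector.

```python
import math

NUM_BINS = 8  # orientation bins per square (45 degrees each)
CELL = 4      # each square is 4x4 pixels
GRID = 4      # 4x4 squares cover the 16x16 patch


def gradients(patch):
    """Per-pixel gradient magnitude and orientation (radians in [0, 2*pi)),
    using central differences; border pixels reuse their nearest neighbor."""
    h, w = len(patch), len(patch[0])
    mag = [[0.0] * w for _ in range(h)]
    ori = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx = patch[y][min(x + 1, w - 1)] - patch[y][max(x - 1, 0)]
            dy = patch[min(y + 1, h - 1)][x] - patch[max(y - 1, 0)][x]
            mag[y][x] = math.hypot(dx, dy)
            ori[y][x] = math.atan2(dy, dx) % (2 * math.pi)
    return mag, ori


def sift_like_descriptor(patch):
    """128-D vector: one 8-bin orientation histogram per 4x4 square, each pixel
    voting with its gradient magnitude; squares are concatenated row by row."""
    if len(patch) != 16 or any(len(row) != 16 for row in patch):
        raise ValueError("expected a 16x16 patch")
    mag, ori = gradients(patch)
    descriptor = []
    for gy in range(GRID):
        for gx in range(GRID):
            hist = [0.0] * NUM_BINS
            for y in range(gy * CELL, (gy + 1) * CELL):
                for x in range(gx * CELL, (gx + 1) * CELL):
                    # Map the angle to one of 8 bins; % guards against rounding to 8.
                    b = int(ori[y][x] / (2 * math.pi) * NUM_BINS) % NUM_BINS
                    hist[b] += mag[y][x]
            descriptor.extend(hist)
    return descriptor


# A horizontal intensity ramp (hypothetical values): all gradients point along +x.
ramp = [[10 * x for x in range(16)] for _ in range(16)]
d = sift_like_descriptor(ramp)
print(len(d))   # 128
print(d[:16])   # histograms of the first two squares: all weight in bin 0
```

For the ramp, every gradient points in the same direction, so each square puts all of its weight into bin 0. Adding a constant to every pixel of the patch yields exactly the same descriptor, because the constant cancels in the differences.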

SIFT descriptor illustration:

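SIFT is robust to illumination changes: gradients are unaffected by a uniform shift in light intensity, and the full SIFT descriptor is also normalized to unit length, which cancels a uniform change in contrast. Its rotation invariance comes from warping each patch to its canonical orientation in the second step. In addition, since each histogram discards where exactly within its 4×4 square a gradient occurred, the descriptor tolerates small shifts and deformations.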
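The illumination argument can be checked on a single row of pixels. In this minimal sketch the intensities are made up and the gradient is simplified to forward differences (any finite-difference gradient behaves the same way). Adding a constant c leaves the gradients unchanged, multiplying by a constant k scales them by k, and normalizing to unit length, as the full SIFT descriptor does, removes that factor.

```python
import math


def normalize(vec):
    """Scale a vector to unit Euclidean length (all-zero vectors are returned as-is)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


def forward_differences(row):
    """1-D gradient approximation: difference between neighboring pixels."""
    return [b - a for a, b in zip(row, row[1:])]


row = [12, 40, 90, 95, 60]          # hypothetical pixel intensities
brighter = [p + 25 for p in row]    # brightness shift: I + c
contrast = [2 * p for p in row]     # contrast change: k * I

g = forward_differences(row)
print(g == forward_differences(brighter))                    # True: c cancels out
print(forward_differences(contrast) == [2 * v for v in g])   # True: gradients scale by k
print(all(math.isclose(a, b) for a, b in
          zip(normalize(g), normalize(forward_differences(contrast)))))  # True
```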

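Other members of this family, such as SURF and GLOH, are also built on local gradient statistics: GLOH computes histograms of gradient orientations over a log-polar grid, while SURF sums Haar wavelet responses over a grid of subregions. SIFT and SURF are patented, so they can’t be freely used in applications.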

So, that’s it for now :) In the next few posts we will talk about binary descriptors, which provide an alternative: they are light, fast and not patented.

Gil.

References:

[1] Lowe, David G. “Object recognition from local scale-invariant features.” Proceedings of the Seventh IEEE International Conference on Computer Vision, Vol. 2. IEEE, 1999.

[2] Bay, Herbert, Tinne Tuytelaars, and Luc Van Gool. “SURF: Speeded up robust features.” Computer Vision – ECCV 2006. Springer Berlin Heidelberg, 2006: 404-417.

[3] Mikolajczyk, Krystian, and Cordelia Schmid. “A performance evaluation of local descriptors.” IEEE Transactions on Pattern Analysis and Machine Intelligence 27.10 (2005): 1615-1630.


Hi! Thanks for your nice notes.

I wonder if I could use the image (at step 5) in my project work. How can I cite your blog?

I appreciate it.

Sure, feel free to use any image you’d like. You can cite me as:

Levi Gil, (2013, August 18). A Short introduction to descriptors [blog post], https://gilscvblog.wordpress.com/2013/08/18/a-short-introduction-to-descriptors/

Can I ask what you are trying to do in your project?

Thanks! Actually, I was about to make something like what you did, but when I saw your blog I decided to use your image instead. I am using HOG as part of my algorithm for automatic registration of specific medical markers in X-ray images.

Again, thank you!

: ))


Thanks for the great explanation. I wonder if you can explain to me how to compare one image with a list of images using SURF.

Hi Mohammed,

Could you please mail me with your question at: gil.levi100@gmail.com? I don’t want to go into long discussions in the comments.

Best,

Gil


Hi, very informative notes. Can you please suggest which local descriptor I could use to measure patch similarity between a test image and a template image? Also, which measure (other than SSD) could I use? I am working with MRI images. Thanks in advance.

Hi,

It’s difficult to answer without looking at the images.

Could you please send me some example images to my mail (gil.levi100@gmail.com) along with a description of the problem and I’ll try to help you as best as I can.

Best,

Gil

Hi, I am confused about the orientation of descriptors. When we compute the angle for descriptor orientation before matching, what is the reference angle for that? How would we know which is the right orientation?

Hi,

The angle is defined as the angle of the patch. You can think of it as the “direction in which the patch points”.

This is a toy example, but you can see the following figure from a different post:

Gil.

Hi! Thanks for your notes.

I’m studying object recognition and I can get ORB descriptors using a vision library, but I don’t know how to match a template image to an input image.

Could you tell me a method for matching ORB descriptors?

Thanks in advance.

Hi,

I need more details on the application to answer, but in general you can extract ORB descriptors from the template image and the query image and then match them using the Hamming distance.
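For binary descriptors such as ORB, the Hamming distance is the number of bits in which two descriptors differ. A minimal sketch in plain Python, assuming each descriptor is stored as a `bytes` object (OpenCV's ORB descriptors are 32 bytes, i.e. 256 bits, by default); in OpenCV itself, the same distance is selected with `cv2.BFMatcher(cv2.NORM_HAMMING)`. The function name and byte values are hypothetical.

```python
def hamming_distance(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length binary descriptors."""
    if len(a) != len(b):
        raise ValueError("descriptors must have the same length")
    # XOR leaves a 1 wherever the bits differ; count those 1s byte by byte.
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


print(hamming_distance(bytes([0b1010_1010, 0xFF]),
                       bytes([0b1010_0101, 0x0F])))  # 8
```

XOR plus a bit count is why binary descriptors are so cheap to compare; optimized implementations do the same on whole machine words at once.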

However, I don’t recommend using local descriptors (such as ORB) for object recognition tasks. There are more suitable methods for that purpose.

Gil

Thanks for your reply.

I am studying an on-board vision system for object recognition (e.g. cars) using a binary descriptor, ORB, because of the computational load.

I think I need to study more…

Thanks..!

Hi,

If you have any questions, please feel free to mail me at gil.levi100@gmail.com.

Gil


Thank you for your posts.

I am going to read entire blog, because your explanation is understandable to me.

Thank you !


I have been reading about feature detection and description algorithms for several days, and this is the clearest and most concise description of HOG I have come across. It all made sense when I read this. So useful. Thank you! I look forward to reading your other posts.

Thanks!

Hi, I am confused about the orientation of descriptors. When we compute the angle for descriptor orientation before matching, what is the reference angle for that? How would we know which is the right orientation? Please give more examples.

Hi,

Thank you for your interest in my blog.

Take a look at the post on ORB, where I describe ORB’s method of computing orientation, with a diagram of the patch angle. I’ll be happy to explain further if you have any questions.
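A simplified sketch of the intensity-centroid idea behind ORB’s orientation: the patch “points” from its center toward the centroid of its intensities, and the angle is measured against the image x-axis, which serves as the reference direction. This version uses a square patch and plain lists, whereas ORB computes the moments over a circular region; the function name and patch values are hypothetical.

```python
import math


def intensity_centroid_angle(patch):
    """Angle (radians, in (-pi, pi]) of the vector from the patch center to its
    intensity centroid, measured from the +x axis (columns, to the right).
    Image rows grow downward, so a positive angle points toward the bottom."""
    h, w = len(patch), len(patch[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    # First-order moments relative to the patch center.
    m10 = sum((x - cx) * patch[y][x] for y in range(h) for x in range(w))
    m01 = sum((y - cy) * patch[y][x] for y in range(h) for x in range(w))
    return math.atan2(m01, m10)


bright_right = [[0, 0, 1, 1] for _ in range(4)]
bright_bottom = [[0] * 4, [0] * 4, [1] * 4, [1] * 4]
print(round(math.degrees(intensity_centroid_angle(bright_right)), 6))   # 0.0
print(round(math.degrees(intensity_centroid_angle(bright_bottom)), 6))  # 90.0
```

Rotating each patch by minus this angle brings every patch to the same canonical orientation, which is what makes the subsequent comparison rotation invariant.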

Best,

Gil

Hey mate,

This tutorial is bloody brilliant, I was wondering if you had another one comparing HOG descriptors (SIFT vs SURF vs GLOH etc.). I haven’t found one to this standard and simplicity.

Cheers

Matt

Hi Matt,

Thank you for your kind words.

I have a post where I compare several binary and floating point descriptors. I’m afraid GLOH was not included in the evaluation, but SIFT and SURF were:

Cheers,

Gil

Great Explanation!

Good Luck!

I have one image with a 128×m matrix of feature vectors. I want to compare the similarity between this image and 100 other images with feature matrices of size 128×n1 to 128×n100. What is the formula based on Euclidean distance?

The most straightforward approach would be to go over the 100 images and for each one do the following:

For each feature vector in the test image, find its nearest-neighbor feature vector in the query image (one of the 100). Then take the average distance between the nearest neighbors.
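The approach described above can be sketched in plain Python. This assumes each image’s descriptors are stored as a list of vectors (one row per descriptor, i.e. the transpose of the 128×m layout in the question) and uses brute-force search; the function names and toy values are hypothetical.

```python
import math


def l2_distance(a, b):
    """Euclidean distance between two descriptors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def image_distance(test_desc, query_desc):
    """Average, over the test image's descriptors, of the distance to the nearest
    neighbor among the query image's descriptors (lower = more similar)."""
    total = sum(min(l2_distance(t, q) for q in query_desc) for t in test_desc)
    return total / len(test_desc)


def rank_images(test_desc, database):
    """Indices of the database images, sorted from most to least similar."""
    scores = [image_distance(test_desc, query_desc) for query_desc in database]
    return sorted(range(len(database)), key=lambda k: scores[k])


# Toy 2-D descriptors (hypothetical); with SIFT each row would hold 128 values.
test = [[0.0, 0.0], [1.0, 1.0]]
database = [[[5.0, 5.0]], [[0.0, 0.0], [1.0, 1.0]], [[0.0, 1.0]]]
print(rank_images(test, database))  # [1, 2, 0]
```

Brute force costs m·n distance computations per image pair; for large collections, approximate nearest-neighbor search (for example OpenCV’s FLANN-based matcher) scales better.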

There are more sophisticated approaches, of course.

Gil

Thanks for the explanation, but how do I measure the similarity between two feature vectors after I get the concatenation of their histograms?

I used the L2 distance.

How? Can you show me an example of doing this for two features?

You can see an example in OpenCV using Python here:

http://docs.opencv.org/trunk/dc/dc3/tutorial_py_matcher.html

Quite helpful.

Thanks!

Hey, very informative blog. Can you help me with the GLOH descriptor? I am using it for insulator detection. Thank you.

Sure, mail me at gil.levi100@gmail.com

Hi,

I want to draw a histogram like the one you posted here, named “concatenating histograms from different squares”.

Actually, I used the OpenCV SIFT implementation to find keypoints and descriptors for a bunch of images. I now have the descriptors of these images, which are stored as a file of numbers. I have no idea how I could use these numbers to draw a histogram for each image, or for all of them.

Any help would be appreciated gratefully.

Bests

Hi,

The figure that you are referring to is actually just a drawing to illustrate how sift works, it doesn’t hold any real values.

Gil
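If you do want to look at real values, one minimal approach is to split each 128-value SIFT vector into 16 histograms of 8 bins and draw each one, here as text bars. This sketch assumes the vector is stored square by square, with the 8 orientation bins of each square stored consecutively; check your library’s documentation for the layout it actually uses. The function names and the stand-in descriptor values are hypothetical, and a plotting library such as matplotlib could draw proper bar charts instead.

```python
def split_histograms(descriptor, bins=8):
    """Split a flat descriptor into consecutive chunks of `bins` values
    (assumed layout: one chunk of orientation bins per square)."""
    if len(descriptor) % bins:
        raise ValueError("descriptor length must be a multiple of bins")
    return [list(descriptor[i:i + bins]) for i in range(0, len(descriptor), bins)]


def text_bars(hist, width=20):
    """Render one histogram as text lines, scaling the largest bin to `width` '#'s."""
    peak = max(hist) or 1
    return [f"bin {b}: " + "#" * round(width * v / peak) for b, v in enumerate(hist)]


# Hypothetical stand-in for one 128-value SIFT descriptor read from your file.
descriptor = [(7 * i) % 23 for i in range(128)]
for square, hist in enumerate(split_histograms(descriptor)[:2]):
    print(f"square {square}")
    print("\n".join(text_bars(hist)))
```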


Very well explained. It focuses on the descriptor rather than on both keypoint detection and descriptor-based matching.

Thank you.

Thank you for the good post. I understood it clearly.

Thank you for your post!

I am currently evaluating the number of matches under rotation, and I found that SIFT and SURF peak every 90 degrees of rotation. Could I ask why this is so? (Does it have something to do with the 8 directions, i.e. the 45-degree intervals?)

Hi Lynus,

Thank you for your interest. By rotation, do you mean the orientation of the patch or rotating the image?

Gil