This is our fifth post in the series about binary descriptors, and here we will talk about the FREAK[4] descriptor. This is the last descriptor that we’ll talk about, as the next and final post in the series will give a performance evaluation of the different binary descriptors. Just a reminder: we had an introduction to binary descriptors and posts about BRIEF[5], ORB[3] and BRISK[2].

As you may recall from the previous posts, a binary descriptor is composed out of three parts:

- A sampling pattern: where to sample points in the region around the keypoint.

- Orientation compensation: some mechanism to measure the orientation of the keypoint and rotate it to compensate for rotation changes.

- Sampling pairs: which pairs to compare when building the final descriptor.

Recall that to build the binary string representing a region around a keypoint we need to go over all the pairs and for each pair (p1, p2) – if the intensity at point p1 is greater than the intensity at point p2, we write 1 in the binary string and 0 otherwise.
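The comparison rule above can be sketched in a few lines. This is only an illustration on a toy patch: the function name `build_descriptor`, the `lookup` helper and the three pairs are made up for the example and are not taken from any of the papers.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[int, int]


def build_descriptor(intensity: Callable[[Point], float],
                     pairs: Sequence[Tuple[Point, Point]]) -> List[int]:
    """Return one bit per pair: 1 if I(p1) > I(p2), and 0 otherwise."""
    return [1 if intensity(p1) > intensity(p2) else 0 for p1, p2 in pairs]


# Toy 3x3 patch, indexed as patch[y][x] (hypothetical values).
patch = [
    [10, 20, 30],
    [40, 50, 60],
    [70, 80, 90],
]


def lookup(p: Point) -> float:
    x, y = p
    return patch[y][x]


# Three hypothetical sampling pairs, given as ((x1, y1), (x2, y2)).
pairs = [((0, 0), (2, 2)), ((2, 2), (0, 0)), ((1, 1), (1, 1))]
bits = build_descriptor(lookup, pairs)
print(bits)  # [0, 1, 0]
```

In a real descriptor the intensities would be smoothed values at the sampling points rather than raw pixels, and there would be hundreds of pairs instead of three.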

In a nutshell, FREAK is similar to BRISK in having a handcrafted sampling pattern, and similar to ORB in using machine learning techniques to learn the optimal set of sampling pairs. FREAK also has an orientation mechanism that is similar to that of BRISK.

Retinal sampling pattern

Many sampling patterns can be used to compare pixel intensities. As we’ve seen in the previous posts, BRIEF uses random pairs, ORB uses learned pairs and BRISK uses a circular pattern where points are equally spaced on concentric circles, similar to DAISY[1].

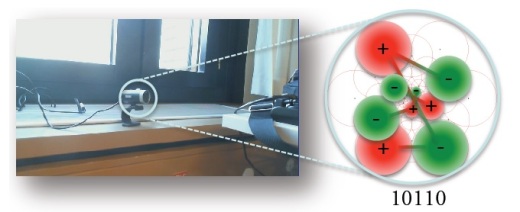

FREAK suggests using a retinal sampling grid, which is also circular but has a higher density of points near the center. The density of points drops exponentially with the distance from the center, as can be seen in the following figure:

Each sampling point is smoothed with a Gaussian kernel where the radius of the circle illustrates the size of the standard deviation of the kernel.

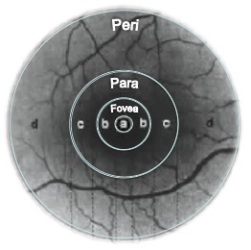
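To get a feel for such a grid, here is a rough sketch that places points on concentric rings whose radii shrink geometrically toward the center, so the points become denser there. All numbers (number of rings, points per ring, shrink factor, the 0.25 factor for the kernel size) are illustrative assumptions and are not the values used in the paper or in any library.

```python
import math


def retina_like_pattern(n_rings=7, points_per_ring=6,
                        outer_radius=1.0, shrink=0.7):
    """Return (x, y, sigma) tuples for a retina-like sampling grid.

    Ring radii decay geometrically, so rings crowd together near the center.
    sigma is the (assumed) standard deviation of the smoothing kernel and is
    taken proportional to the ring radius.
    """
    points = []
    for ring in range(n_rings):
        radius = outer_radius * shrink ** ring
        sigma = 0.25 * radius  # illustrative choice, not from the paper
        # Stagger every other ring by half the angular step.
        offset = (math.pi / points_per_ring) * (ring % 2)
        for k in range(points_per_ring):
            angle = 2.0 * math.pi * k / points_per_ring + offset
            points.append((radius * math.cos(angle),
                           radius * math.sin(angle),
                           sigma))
    # One extra sampling point at the center, with the smallest kernel.
    points.append((0.0, 0.0, 0.25 * outer_radius * shrink ** n_rings))
    return points


pattern = retina_like_pattern()
print(len(pattern))  # 43
```

The coordinates are in units of the patch radius; an implementation would scale them by the keypoint size before sampling.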

As can be seen in the following figure, the suggested sampling grid corresponds with the distribution of receptive fields over the retina:

Learning the sampling pairs

With a few dozen sampling points, thousands of sampling pairs can be considered. However, many of the pairs might not be useful for efficiently describing a patch. A possible strategy is to follow BRISK’s approach[2] and select pairs according to their spatial distance. However, the selected pairs can be highly correlated and not discriminative. Consequently, FREAK follows ORB’s approach[3] and tries to learn the pairs by maximizing the variance of the pairs and taking pairs that are not correlated. ORB’s approach was explained at length in our post about ORB, so we won’t explain it again here.

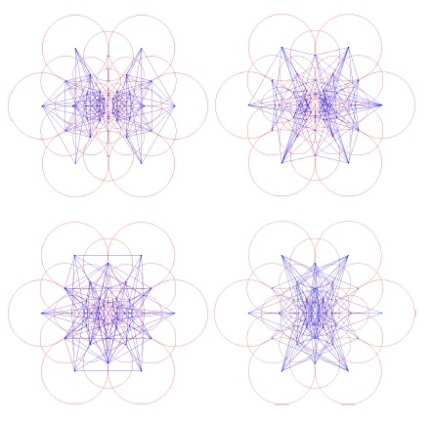

Interestingly, there is a structure in the resulting pairs: a coarse-to-fine ordering that matches our understanding of the model of the human retina. The first pairs that are selected mainly compare sampling points in the outer rings of the pattern, whereas the last pairs mainly compare points in the inner rings of the pattern. This is similar to the way the human eye operates, as it first uses the perifoveal receptive fields to estimate the location of an object of interest. Then, the validation is performed with the more densely distributed receptive fields in the fovea area.

The sampling pairs are illustrated in the following figure, where each figure contains 128 pairs (from left to right, top to bottom):

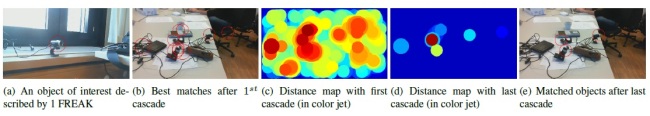

FREAK takes advantage of this coarse-to-fine structure to further speed up the matching using a cascade approach: when matching two descriptors, we first compare only the first 128 bits. If the distance is smaller than a threshold, we continue the comparison with the next 128 bits. As a result, a cascade of comparisons is performed, accelerating the matching even further, as more than 90% of the candidates are discarded with the first 128 bits of the descriptor. The following figure illustrates the cascade approach:
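The cascade can be sketched as follows, assuming a 512-bit descriptor packed into 64 bytes and compared in chunks of 16 bytes (128 bits). The function names and the threshold values are hypothetical; the per-stage thresholds here are applied to the accumulated distance, which is one possible reading of the scheme and not necessarily what the authors implemented.

```python
def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equally long byte strings."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def cascade_distance(d1: bytes, d2: bytes, thresholds, chunk_bytes: int = 16):
    """Compare descriptors 128 bits at a time.

    Returns the accumulated Hamming distance, or None as soon as the
    accumulated distance reaches the threshold of the current stage.
    """
    total = 0
    for stage, limit in enumerate(thresholds):
        start = stage * chunk_bytes
        total += hamming(d1[start:start + chunk_bytes],
                         d2[start:start + chunk_bytes])
        if total >= limit:
            return None  # candidate rejected, later bits are never read
    return total


# Hypothetical thresholds on the accumulated distance after each stage.
thresholds = (40, 80, 120, 160)

query = bytes(64)
far = bytes([0xFF]) * 16 + bytes(48)   # differs in all of the first 128 bits
near = bytes([0x01]) + bytes(63)       # differs in a single bit

print(cascade_distance(query, far, thresholds))   # None
print(cascade_distance(query, near, thresholds))  # 1
```

The saving comes from the early `return None`: most non-matching candidates cost only one 128-bit comparison.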

Orientation assignment

To somewhat compensate for rotation changes, FREAK measures the orientation of the keypoint and rotates the sampling pairs by the measured angle. FREAK’s mechanism for measuring the orientation is similar to that of BRISK[2], except that instead of using long-distance pairs, FREAK uses a predefined set of 45 symmetric sampling pairs:

For more details on orientation assignment, you can refer to the post on BRISK[2].
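A minimal sketch of this kind of orientation estimate is given below. It assumes the gradient-style formulation usually given for FREAK: sum the intensity differences over the orientation pairs, each weighted by the unit vector between the two points, and take the angle of the resulting vector. The sample layout and the two pairs are made up for the example; the real method uses 45 pairs on the retinal pattern.

```python
import math


def estimate_orientation(samples, pairs):
    """Estimate the patch orientation in radians.

    samples: list of (x, y, smoothed_intensity) sampling points
    pairs:   list of (i, j) index pairs into samples
    """
    gx = gy = 0.0
    for i, j in pairs:
        x1, y1, v1 = samples[i]
        x2, y2, v2 = samples[j]
        dx, dy = x1 - x2, y1 - y2
        norm = math.hypot(dx, dy)
        # Intensity difference times the unit vector between the two points.
        gx += (v1 - v2) * dx / norm
        gy += (v1 - v2) * dy / norm
    # Averaging over the number of pairs would not change the angle.
    return math.atan2(gy, gx)


# Hypothetical patch that is brighter on the right-hand side.
samples = [(1.0, 0.0, 200.0), (-1.0, 0.0, 100.0),
           (0.0, 1.0, 150.0), (0.0, -1.0, 150.0)]
pairs = [(0, 1), (2, 3)]
angle = estimate_orientation(samples, pairs)
print(angle)  # 0.0
```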

Performance

Though we’ll have a whole post that will compare the performance of the binary descriptors, we should say a few words now.

- When there are changes due to blur, FREAK performs the worst of all the binary descriptors.

- When there are view-point changes, FREAK slightly outperforms ORB and BRISK, but performs worse than BRIEF.

- When there are zoom + rotation changes, FREAK slightly outperforms BRIEF and BRISK, but performs worse than ORB.

- When illumination changes or JPEG compression is present, FREAK slightly outperforms BRISK and ORB, but performs much worse than BRIEF.

The next post will be our last in the series about binary descriptors, which will give a performance evaluation of binary descriptors.

References

[1] Tola, Engin, Vincent Lepetit, and Pascal Fua. “A fast local descriptor for dense matching.” Computer Vision and Pattern Recognition, 2008. CVPR 2008. IEEE Conference on. IEEE, 2008.

[2] Leutenegger, Stefan, Margarita Chli, and Roland Y. Siegwart. “BRISK: Binary robust invariant scalable keypoints.” Computer Vision (ICCV), 2011 IEEE International Conference on. IEEE, 2011.

[3] Rublee, Ethan, et al. “ORB: an efficient alternative to SIFT or SURF.” Computer Vision (ICCV), 2011 IEEE International Conference on. IEEE, 2011.

[4] Alahi, Alexandre, Raphael Ortiz, and Pierre Vandergheynst. “FREAK: Fast retina keypoint.” Computer Vision and Pattern Recognition (CVPR), 2012 IEEE Conference on. IEEE, 2012.

[5] Calonder, Michael, et al. “BRIEF: Binary robust independent elementary features.” Computer Vision–ECCV 2010. Springer Berlin Heidelberg, 2010. 778-792.

Questions about coarse-to-fine search. Why exactly 128 bits? After getting the Hamming distance of the first 128 bits of two descriptors, if it is inside the threshold, do I need to check 256 bits, 384 bits and 512 bits, each with its own increasing threshold? Can we say that the first bits in the descriptor are more significant than the last bits? Is there any more information about data correlation or data meaning (data structure) of these 512 bits? Is there an algorithm for coarse-to-fine search?

Yes, the authors suggest using the first 128 bits and if the distance is lower than a threshold, continue to the next 128 bits (bits 129-256) and check the distance again against a threshold.

The authors state that 90% of the candidates are discarded after the first 128 bits, so I guess it’s just the number that fitted (and perhaps since it’s a power of 2).

I’m not sure you can say the first bits are more significant than the last bits, but they do measure coarse information, whereas the last bits measure fine information.

Please let me know if you have further questions.

Thank you very much 🙂 , this series of posts really helped me understand these methods. A well illustrated concise explanation was exactly what I needed.

I am looking forward to the full performance comparison, coming soon I hope 🙂

Hi Rasmus,

Thanks for your interest in my blog.

I’m afraid that due to personal reasons, I will only be able to publish the evaluation report in a few months.

In the meanwhile, I’m working on other posts that I hope to publish soon.

Best,

Gil.

Dear Gil,

thanks for the excellent introduction to binary descriptors. These short tutorials are really helpful because they highlight the differences between the four descriptor types.

When can we expect the performance comparison?

Jürgen Brauer

Hi Jurgen,

Thanks for your interest in my blog.

Due to personal reasons, I will only be able to publish the post on performance comparison in a few months.

In the meanwhile, I’m working on other posts.

I know it’s been a while since I’ve updated my blog and I’m sorry for that, it is due to some pressure I had with my work and research.

Gil.

Dear Gil:

Thanks for your excellent posts.

I have some questions after reading your posts:

1) How to determine the key points?

2) After the orientation calculation, what to do? Is it right to rotate the sampling pattern and choose pairs?

Best,

Fu.

Dear Fu,

Thanks for your interest in my blog.

1. The keypoints are determined using some sort of keypoint detector, for example: Harris or FAST.

2. After calculating the orientation, the pairs (or the patch) are rotated by the orientation angle.
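As a small illustration of point 2, rotating the sampling pairs is just a 2D rotation of each point around the keypoint. The helper names below are hypothetical, and coordinates are assumed to be relative to the keypoint; practical implementations usually avoid doing this per keypoint by precomputing the rotated pattern for a fixed set of quantized angles.

```python
import math


def rotate_point(p, angle):
    """Rotate a point (x, y) around the origin by angle (radians)."""
    x, y = p
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y)


def rotate_pairs(pairs, angle):
    """Rotate both points of every sampling pair by the same angle."""
    return [(rotate_point(p1, angle), rotate_point(p2, angle))
            for p1, p2 in pairs]


# One hypothetical pair, rotated by 90 degrees.
pairs = [((1.0, 0.0), (0.0, 2.0))]
rotated = rotate_pairs(pairs, math.pi / 2)
print(rotated)
```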

Please let me know if you have any further questions.

Best,

Gil

After keypoint detection… the next step is to find the sampling pairs.. how to get the sampling pairs ?

The sampling pairs are fixed beforehand. I’m not sure where to get the sampling pairs for BRISK, but the sampling pairs for FREAK are available in the OpenCV implementation: https://github.com/opencv/opencv_contrib/blob/master/modules/xfeatures2d/src/freak.cpp

Thank you so so much, the full evaluation please please please 🙂

Your work is wonderful.

Hi Waqas,

Thank you for your interest in my blog!

Due to personal reasons, I cannot post the full evaluation results. However, if you’re interested, you can mail me at gil.levi100@gmail.com and I’ll send you the results.

Gil.

Hi gillevicv,

Great Post. Thank you!

I have a doubt after I implement FREAK and BRISK code to make evaluation.

BRISK is always faster than FREAK in my testing, but the FREAK authors claim that it outperforms BRISK. Can I know what the problem is?

Thank you.

Hope to get your reply soon.

Hi Esther,

Did the FREAK authors write that FREAK is faster than BRISK? If they wrote that it outperforms BRISK, then they meant that its accuracy is better, not necessarily that the running times are better.

Having said that, FREAK does have an advantage in running times, due to its cascade nature (first compare the first 128 bits and then continue to the next 128 bits if the distance is below a threshold).

In any case, it’s hard to know what the problem is without taking a look at the implementation. Would you like to send me your code to gil.levi100@gmail.com so I could take a look?

Also, as a side note, I would recommend using standard implementations, such as the OpenCV implementations.

Gil

Hello Gil,

Awesome post!

Do you have an idea of the intuition of why BRIEF outperforms the other descriptors when there are viewpoint changes? I’d expect the rotation-invariant descriptors to do better.

Hi Brian,

Thanks for your interest in my blog.

The benchmark that I used for the evaluation is the following: http://www.robots.ox.ac.uk/~vgg/research/affine/

In most cases, the datasets that exhibit viewpoint changes do not exhibit rotation changes. As BRIEF is not rotation invariant, it does not try to account for rotation (which is not there). That’s why it gives superior performance over the rotation-invariant descriptors.

By the way, I’ve been experimenting with a simple rotation-invariant version of BRIEF that I developed and it gives nice results in cases where rotation is present. I hope to post about it when I find the time.

Gil

Hello,

First of all thank you for preparing such a great post about these descriptors. Now if you let me I have a couple questions.

I’m using the BRISK and FREAK descriptors, which have implementations in MATLAB 2014a. I’d expect these descriptors to be bit strings, as defined in the papers, but MATLAB does a little trick. It generates 512-bit strings and then stores them as uint8, such that a 512-bit string is equal to 64 uint8 bytes. So, in a way, the descriptor is now a vector of size 64 instead of a vector of size 512, and now Hamming distance is not an option.

Here are my questions:

1- What is your intuition about using this 64-dimensional descriptor instead of the 512-dimensional descriptor? Would it give better or worse performance? Would using Euclidean distance for this be enough, or which distance metric can you recommend?

2- I can convert 64 byte uint8 to 512 bit string. But 512 is kind of too much for me (because I’ve lots of images and it leads to memory issues). Unfortunately MATLAB API does not allow me to create the descriptor as 256 or 128 bit string (as far as I know). Is it wrong to reduce the dimension to 128 just by taking samples from created 512 bit string? I’m not sure about doing this because both descriptors use a pattern for taking pairs in the first place so I can’t predict the outcome. Can you comment on that?

Thanks in advance

Güney

Hi Güney,

Thanks for your interest in my blog!

Your questions are very interesting and I would like to answer you by mail (I don’t want to have long discussions in the comments).

I have your mail address. I’ll send you a short mail now, but it will take me a few days to fully answer your questions.

Thanks again,

Gil.

Hi,

Many thanks for the post! I have been testing FREAK for an image classification task. I am using MATLAB, which converts the 512 bit string into a 64 single. Do you think that it is appropriate to use the Euclidean distance as a metric? I want to cluster the descriptors and Hamming distance is slower.

Also, do you think that using all pairs would make the descriptor more discriminant? How do you think that the selected pairs are affecting the results? I am using 15 scene dataset.

Thanks a lot,

Cristina

Hi Cristina,

I don’t want to get into long discussions in the comments sections, so can you please mail me your questions to gil.levi100@gmail.com ?

If you could also explain about your project and perhaps send me some example images, I could advise you much better.

Gil

What about AKAZE descriptors? They do outperform all others and are only slightly more computationally complex than the earlier binary descriptors..

thanks-

G. Tom

Thanks for your comment, I will try to look into AKAZE (I need to test them anyway as part of my research:)

Hi, thanks a lot for the tutorials. As everyone said: they’re awesome!!

The question that I had has already been asked by “Güney Kayım” regarding the usage of the descriptor returned by MATLAB. I’m really curious to know your insights about this particular matter (64 uint8 descriptor utilisation).

All the best.

Hi Tarik,

Thank you for your kind words.

MATLAB (and also OpenCV, for that matter) returns a vector of 64 uint8 numbers. Convert each of the uint8 values to binary, concatenate them into a single binary vector and you will get 64*8 = 512 binary values. That’s the trick.
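A short sketch of that conversion, using plain Python bytes to stand in for the 64 uint8 values. The bit order within each byte (most significant bit first) is an assumption; it does not matter for the Hamming distance as long as both descriptors are unpacked the same way.

```python
def unpack_bits(descriptor: bytes) -> list:
    """Expand each byte into 8 bits, most significant bit first."""
    return [(byte >> shift) & 1
            for byte in descriptor
            for shift in range(7, -1, -1)]


def hamming_packed(a: bytes, b: bytes) -> int:
    """Hamming distance computed directly on the packed bytes."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


# Two hypothetical 64-byte descriptors that differ in exactly two bits.
d1 = bytes([0b10100000] + [0] * 63)
d2 = bytes(64)

bits1 = unpack_bits(d1)
bits2 = unpack_bits(d2)
print(len(bits1))                                 # 512
print(sum(x != y for x, y in zip(bits1, bits2)))  # 2
print(hamming_packed(d1, d2))                     # 2
```

As the last line shows, the Hamming distance can be computed on the packed form with XOR and a bit count, so unpacking is not required for matching. Note also that the numeric difference between packed bytes does not reflect the number of differing bits: the byte values 128 and 0 differ in only one bit.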

Gil.

Awesome job, man. Very clear explanation.

Thanks!

Hey Gil,

First of all I would like to say that I recently found your blog and find it most interesting and informative. Although I read a LOT of papers regarding feature matching, detection and description, I find it much better to come to your blog for further understanding and confirmation of the underlying theory. Your way of explaining is very clear and concise, straight to the point. Thank you very much for putting so much effort into these.

I am a master’s student and I am attempting to implement fast and accurate object detection and tracking. The main research area is efficient implementation of related algorithms on heterogeneous multi-processor architectures and hardware acceleration in FPGAs. Thus it is essential for me to perfectly understand these algorithms and how they are implemented in software. I emphasize this because I can’t just use some off-the-shelf implementations, such as those in OpenCV or MATLAB.

My main questions regarding FREAK are:

1. I see most of the pairs are learned from a training data-set and tend to converge to a specific pattern (note the duality of concepts here). Is that the same pattern used to determine orientation or does it vary?

2. As you know, having a defined and coherent pattern of pairs can lead to more efficient implementation in hardware and software (exploiting for example points that are 45 degrees apart from the center). Does such a pattern exist with a good trade-off between efficiency and accuracy?

3. By using for example a multiscale Harris corner detector, does the pattern also need to be rescaled? (This might be a dumb question, but I still want to make sure my assumptions are correct.)

4. Instead of trying to detect the key-points in each frame, I plan to track them instead using a Particle Filter. Thus my concept is to initially detect and match the points of interest and then use them to initialize a Particle filter algorithm to predict the position, scale and rotation of each point in further frames. Would the FREAK descriptor give good results for randomly generated keypoints around a previously detected keypoint? I read some papers regarding this method of tracking and I’m feeling confident that it would give good results, but still I’m having doubts whether FREAK would be the right descriptor for the task.

Thanks in advance and I’m looking forward for more publications from you!

Regards,

Viktorio

Hi Viktorio,

Thank you for your interest in my blog.

1. The sampling pairs which are used to measure orientation are different than the ones used for calculating the descriptor itself. The sampling pairs used to measure orientation are simply a pre-defined hand-crafted set of 45 symmetric sampling pairs.

2. I’m not sure I understand. Can you please elaborate? If you find the explanation long, you can mail me at gil.levi100@gmail.com.

3. Yes and no. If you take a look at OpenCV’s code, you’ll see that ORB, BRISK and FREAK rescale the patch to account for scale changes, but BRIEF and the newly integrated LATCH (of which I’m the main author) do not.

4. I’m not sure actually, I don’t have much experience in tracking and I assume it really sums up to how difficult the video is. However, I don’t think it would be difficult to test it (on CPU) using OpenCV. I can help you with that, if you’d like.

Best,

Gil

Hello Gil,

Thanks for your reply!

1. Yes, I seemed to have missed this part from the paper. This makes things a lot easier. Thanks for pointing it out.

2. To elaborate a bit further on this, I simply want to avoid having a non-coherent list of pairs (as weird as that sounds). I intend to implement the full descriptor algorithm on an FPGA. Having a non-coherent selection of pairs (effectively a list generated from a training set (FREAK) or randomly (BRIEF) ) does not lead to efficient and modular synthesis of hardware. I try to avoid non-deterministic algorithms as much as possible.

3. Hmm, I thought you had an improved version of BRIEF that takes care of multiple scales. Anyways I ask this question, because I’m taking into account the line buffering required to sample using a pattern. I will take a look at your paper. I’m really looking for a light-weight, hardware friendly descriptor.

4. Never been much of an OpenCV person myself. I’m mostly sticking to MATLAB 😛 However I might reconsider, given the circumstances. Any help you can lend me is greatly appreciated.

My main idea is to restrict the search space of the detector/descriptor/matching algorithm, once an initial detection is accomplished, by means of tracking the regions of interest, as is proposed in several papers. (Don’t remember the exact names, but I can give you further information on that part if interested). My goal is to achieve robust real-time tracking of generic objects using interest point detectors and particle filters on an FPGA. As far as I am concerned, the full implementation for detection/description can all be done in hardware on a clock cycle basis (thus extracting regions as pixels arrive from a CMOS camera sensor) I might have to write you a more elaborate email about my idea and how my image-processing pipe-line seems to be forming up, again if you’re interested.

One final question – before reading your paper and getting into yet another descriptor, does mixing the FREAK pattern and pairs for orientation estimation and BRISK short pairs for description make any sense?

Thanks again!

Regards,

Viktorio

Hi,

2. Perhaps you can just use BRISK? it has a coherent set of sampling pairs.

3. “My” version of BRIEF is rotation invariant, not scale invariant (though I guess it won’t be too difficult to add scale invariance given an estimation of the patch scale from the detector).

4. I think it’s best to first implement a small POC in Matlab/OpenCV just to see that everything works before moving on. If you’d like to use Matlab, you have BRISK in Matlab and FREAK/ORB/BRIEF in mexOpenCV.

Combining FREAK orientation estimation and BRISK descriptor extraction might work, but again – you’ll have to implement and test to know how well it performs.

Best,

Gil

Thank you for the article. I am a bit confused on “Learning the Sampling Pairs”. Could you please tell me if what I’ve mentioned below is correct or not?

1) There are ‘m’ number of keypoints(corners) in an image and for each of the ‘m’ , ‘n’ sampling points are taken. Altogether, there will be C(n, 2) number of sampling pairs, where C(n, 2) indicates combination of ‘n’ numbers taking 2 at a time.

2)Then, create a matrix of size m* C(n,2) indicated by M.

3)Find the mean of each column of M.

4)Sort columns on decreasing variance, i.e the one with mean 0.5 first

5)Now, if I want to compare two keypoints P and Q, I should take the rows corresponding to the keypoints and compare their Hamming Distances.

Do we require different datasets for learning sampling pairs here as in ORB, where you mentioned “PASCAL 2006 dataset”? You pointed out that learning sampling pairs for FREAK is same as ORB’s. So, I am confused as to why I would require a dataset.

Hi,

Thank you for your interest in my blog.

The training is done offline. The list you’ve written is almost correct:

1) There are ‘m’ number of keypoints(corners) in an image and for each of the ‘m’ , ‘n’ sampling points are taken. Altogether, there will be C(n, 2) number of sampling pairs, where C(n, 2) indicates combination of ‘n’ numbers taking 2 at a time.

2)Then, create a matrix of size m* C(n,2) indicated by M.

3)Find the mean of each column of M.

4)Sort columns on decreasing variance, i.e the one with mean 0.5 first

5) *** Now, just take the top 512 sampling pairs and they will be the ones we will use from now on ***

6)Now, if I want to compare two keypoints P and Q, I will apply only the 512 sampling pairs that I chose before. Actually, I will only use those sampling pairs from now on for every image and every keypoint.
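The steps above can be sketched roughly as follows. The matrix `M` holds one row per training keypoint and one column per candidate pair, with 0/1 entries. Columns are ranked by how close their mean is to 0.5 (highest variance first) and then picked greedily, skipping any column that is too correlated with one already chosen. The greedy correlation check reflects the "taking pairs that are not correlated" part of the post rather than step 5 as written; the function name, the threshold `max_corr` and the toy data are all hypothetical.

```python
def select_pairs(M, n_select, max_corr=0.2):
    """Return indices of the selected columns (candidate pairs) of M."""
    n_rows, n_cols = len(M), len(M[0])
    cols = [[row[c] for row in M] for c in range(n_cols)]
    means = [sum(col) / n_rows for col in cols]

    # A mean of 0.5 gives the highest variance for a binary test.
    order = sorted(range(n_cols), key=lambda c: abs(means[c] - 0.5))

    def corr(a, b):
        va = means[a] * (1 - means[a])
        vb = means[b] * (1 - means[b])
        if va == 0 or vb == 0:
            return 0.0  # a constant column has no defined correlation
        cov = sum((x - means[a]) * (y - means[b])
                  for x, y in zip(cols[a], cols[b])) / n_rows
        return cov / (va * vb) ** 0.5

    chosen = []
    for c in order:
        if all(abs(corr(c, k)) <= max_corr for k in chosen):
            chosen.append(c)
        if len(chosen) == n_select:
            break
    return chosen


# Toy data: 4 keypoints (rows), 4 candidate pairs (columns).
M = [
    [1, 1, 1, 1],
    [0, 0, 1, 1],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
print(select_pairs(M, n_select=2))  # [0, 2]
```

Column 1 is an exact copy of column 0, so it is skipped even though its variance is just as high. For FREAK, `n_select` would be 512, and the selected pairs are then fixed for every image and every keypoint.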

Regarding the dataset used for learning, the FREAK authors don’t state which dataset they used. They do not reference the PASCAL dataset, so I guess they used a different one. Basically, their method for learning sampling pairs is very similar, except that FREAK has a predefined sampling pattern (a set of concentric circles) whereas ORB uses arbitrary pairs.

Best,

Gil.

thank you again, Gil. Everything is working pretty well now and I am very much pleased that I got to read your blog.

How do you get the sampling pairs? Using BRISK I have found the keypoints and extracted the features. How could I get the sampling points in order to implement the FREAK descriptor in MATLAB?

The sampling pairs are fixed beforehand. I’m not sure where to get the sampling pairs for BRISK, but the sampling pairs for FREAK are available in the OpenCV implementation: https://github.com/opencv/opencv_contrib/blob/master/modules/xfeatures2d/src/freak.cpp

Glad to help!

Gil

Hey

Can you tell me what the difference is between BRUTEFORCE_HAMMING and BRUTEFORCE_HAMMINGLUT? Which one is more suitable for AKAZE or ORB?

Best

Hi Reza,

Here’s a post on the subject from OpenCV’s Q&A forum:

http://answers.opencv.org/question/14402/the-difference-between-bruteforce-hamming-and-bruteforce-hamminglut/

Best,

Gil

Hi Gil,

I found your article on FREAK descriptor really interesting and helpful. At this moment, I have been doing evaluation on binary descriptors, such as ORB, BRIEF, BRISK, LATCH, etc.

However, I am having trouble getting FREAK to work properly (in OpenCV) when doing descriptor matching benchmark.

I am wondering if you might have some tips or tricks you could share regarding this…

Thanks in advance!

Justin.

What have you tried? which OpenCV version?

Send me the details to gil.levi100@gmail.com and I’ll try to help.

Hi,

Can you explain how the variance between two pairs of binary vectors is calculated ?

Yes, the variance is calculated per binary test (that is, per comparison of a pair of pixels). For each binary test, we calculate its value on a large number of patches. This gives a long binary vector of that test’s results, and we calculate the variance of that vector.
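For a binary test this has a simple closed form: if a fraction p of the results are 1, the variance is p(1 - p), which is largest at p = 0.5. A tiny sketch (the function name and the example vectors are made up):

```python
def binary_test_variance(results):
    """Variance of a 0/1 vector: p * (1 - p), where p is the fraction of ones."""
    p = sum(results) / len(results)
    return p * (1 - p)


print(binary_test_variance([1, 0, 1, 0]))  # 0.25
print(binary_test_variance([1, 1, 1, 1]))  # 0.0
```

A test that gives the same answer on every patch has zero variance and carries no information, which is why tests with a mean close to 0.5 are preferred.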

Thank you so much, this actually helped me a lot. This is my first time being introduced to computer vision and I really want to know more about BRISK. Is there something you recommend, also with MATLAB?

Hi, There is a brisk implementation in OpenCV which you can use.