Lately, I’ve been reading a lot about BOW (Bag of Words) models [1], and I thought it would be nice to write a short post on the subject. The post is based on Li Fei-Fei’s slides from the ICCV 2005 course about object detection:

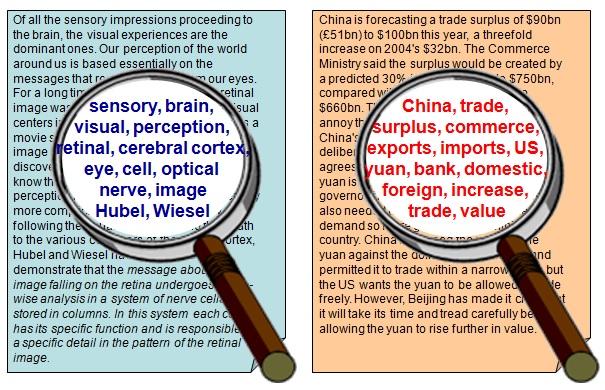

As the name implies, the concept of BOW is actually taken from text analysis. The idea is to represent a document as a “bag” of important keywords, ignoring the order of the words (that’s why it’s called a “bag of words” rather than, say, a list).

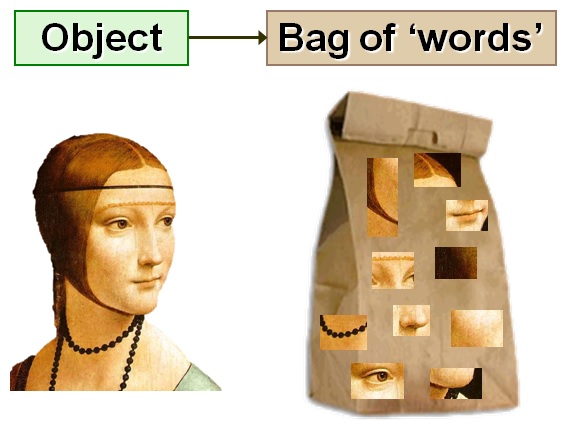

In computer vision, the idea is similar. We represent an object as a bag of visual words, that is, patches that are described by a certain descriptor:

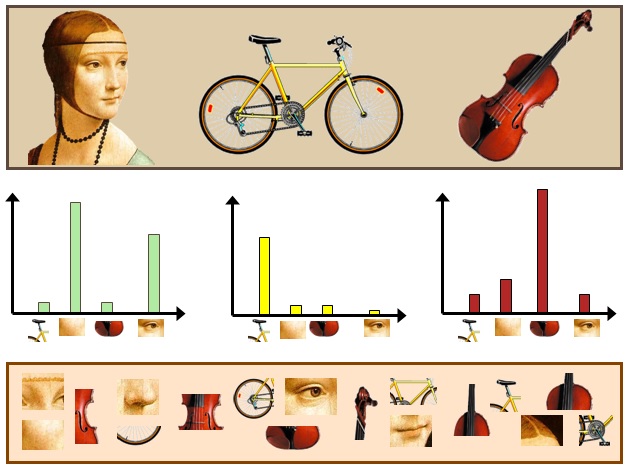

We can use the bag of words model for object categorization by constructing a large vocabulary of visual words and representing each image as a histogram of how frequently each visual word appears in it. The following figure illustrates this idea:

How exactly do we construct the model? First, we need to build a visual dictionary. We do that by taking a large set of object images and extracting descriptors from them (for example, SIFT [2]), either on a grid or at detected keypoints (see the post on descriptors if you’re not sure about their usage).

Next, we cluster the set of descriptors into k clusters (using k-means, for example). The cluster centers act as our dictionary’s visual words.
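The clustering step can be sketched in plain C++ as follows. This is only a minimal illustration of Lloyd's k-means under simplifying assumptions: descriptors are plain float vectors, the centers are initialised with the first k descriptors, and the names `Descriptor`, `squaredL2`, `nearestWord` and `buildVocabulary` are hypothetical helpers, not part of any library.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

using Descriptor = std::vector<float>;  // hypothetical descriptor type

// Squared Euclidean (L2) distance between two descriptors of equal length.
float squaredL2(const Descriptor& a, const Descriptor& b) {
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// Index of the visual word (cluster center) closest to the descriptor.
std::size_t nearestWord(const Descriptor& d, const std::vector<Descriptor>& words) {
    assert(!words.empty());
    std::size_t best = 0;
    float bestDist = std::numeric_limits<float>::max();
    for (std::size_t w = 0; w < words.size(); ++w) {
        const float dist = squaredL2(d, words[w]);
        if (dist < bestDist) { bestDist = dist; best = w; }
    }
    return best;
}

// Plain Lloyd's k-means with a fixed number of iterations.
// Assumption: centers start as the first k descriptors (no k-means++).
std::vector<Descriptor> buildVocabulary(const std::vector<Descriptor>& descriptors,
                                        std::size_t k, int iterations) {
    assert(k > 0 && descriptors.size() >= k);
    std::vector<Descriptor> words(descriptors.begin(),
                                  descriptors.begin() + static_cast<std::ptrdiff_t>(k));
    const std::size_t dim = descriptors[0].size();
    for (int it = 0; it < iterations; ++it) {
        std::vector<Descriptor> sums(k, Descriptor(dim, 0.0f));
        std::vector<std::size_t> counts(k, 0);
        // Assignment step: attach every descriptor to its nearest center.
        for (const Descriptor& d : descriptors) {
            const std::size_t w = nearestWord(d, words);
            for (std::size_t i = 0; i < dim; ++i) sums[w][i] += d[i];
            ++counts[w];
        }
        // Update step: move each center to the mean of its members.
        for (std::size_t w = 0; w < k; ++w) {
            if (counts[w] == 0) continue;  // an empty cluster keeps its old center
            for (std::size_t i = 0; i < dim; ++i)
                words[w][i] = sums[w][i] / static_cast<float>(counts[w]);
        }
    }
    return words;
}
```

In practice you would probably rely on a library implementation with a better initialisation; the sketch is only meant to show what "the cluster centers act as visual words" amounts to.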

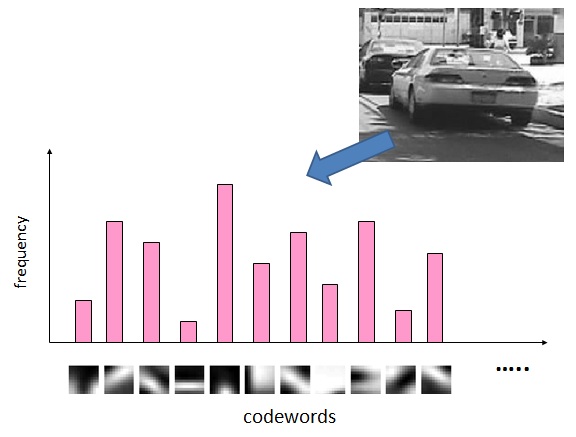

Given a new image, we represent it using our model in the following manner: first, extract descriptors from the image on a grid or around detected keypoints. Next, for each extracted descriptor, find its nearest neighbor in the dictionary. Finally, build a histogram of length k where the i’th value is the frequency of the i’th dictionary word.
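The three steps above can be sketched as a single function. This is a hedged illustration rather than the code of any particular library: it assumes the descriptors have already been extracted as float vectors, it uses the squared L2 distance for the nearest-neighbor search, and `bowHistogram` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Builds the BOW representation of one image: a histogram of length
// k = vocabulary.size() whose i'th entry is the fraction of the image's
// descriptors whose nearest visual word is word i.
std::vector<float> bowHistogram(const std::vector<std::vector<float>>& descriptors,
                                const std::vector<std::vector<float>>& vocabulary) {
    assert(!vocabulary.empty());
    std::vector<float> hist(vocabulary.size(), 0.0f);
    for (const auto& d : descriptors) {
        // Nearest neighbor in the dictionary (squared L2 distance).
        std::size_t best = 0;
        float bestDist = std::numeric_limits<float>::max();
        for (std::size_t w = 0; w < vocabulary.size(); ++w) {
            assert(vocabulary[w].size() == d.size());
            float dist = 0.0f;
            for (std::size_t i = 0; i < d.size(); ++i) {
                const float diff = d[i] - vocabulary[w][i];
                dist += diff * diff;
            }
            if (dist < bestDist) { bestDist = dist; best = w; }
        }
        hist[best] += 1.0f;  // one vote for the winning visual word
    }
    // Normalise counts to frequencies so images with different numbers of
    // descriptors become comparable.
    if (!descriptors.empty())
        for (float& h : hist) h /= static_cast<float>(descriptors.size());
    return hist;
}
```

Normalising by the number of descriptors is a design choice; raw counts also work if every image yields a similar number of descriptors.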

This model can be used in conjunction with a Naïve Bayes classifier or with an SVM for object classification [1].

There is a nice demonstration in VLFeat of a SIFT-based BOW model with an SVM for object classification on the Caltech-101 benchmark.

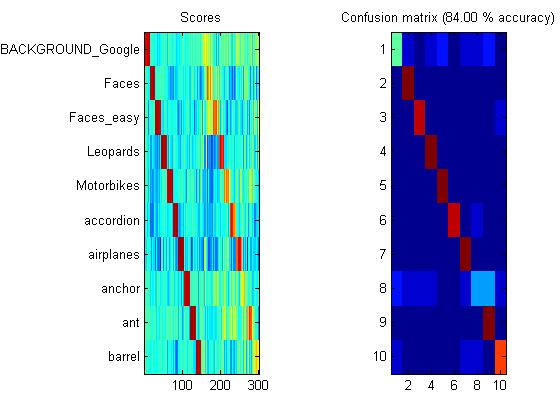

I ran a tiny example of the code using only 10 classes, 15 images for training and 15 images for testing and got the following confusion matrix:

The confusion matrix shows the score that each class’s training set got when each class’s classifier was run on it: the (i,j) element is the result of applying the class j classifier to the class i training set. As you can see, the top scores are on the diagonal, meaning that each class’s top score was obtained by running that class’s own classifier on its training data.
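One way to check the "top scores are on the diagonal" observation numerically is sketched below. It assumes the matrix is stored as a square table of scores with the (i,j) convention described above; `diagonalDominance` is a hypothetical helper name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Returns the fraction of rows whose largest score lies on the diagonal,
// i.e. the fraction of classes i for which the class i classifier gives the
// highest score on the class i image set.
double diagonalDominance(const std::vector<std::vector<double>>& scores) {
    assert(!scores.empty());
    std::size_t hits = 0;
    for (std::size_t i = 0; i < scores.size(); ++i) {
        assert(scores[i].size() == scores.size());  // matrix must be square
        std::size_t best = 0;
        for (std::size_t j = 1; j < scores[i].size(); ++j)
            if (scores[i][j] > scores[i][best]) best = j;
        if (best == i) ++hits;
    }
    return static_cast<double>(hits) / static_cast<double>(scores.size());
}
```

A value of 1.0 means every row peaks on the diagonal, which is the situation described for the matrix above.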

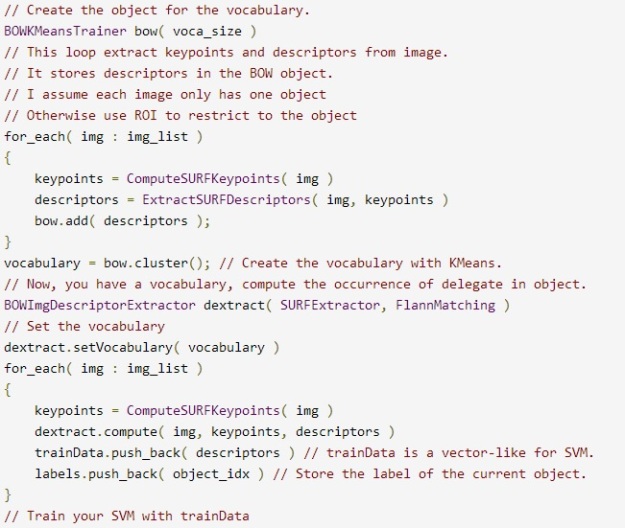

OpenCV also has a module for BOW model classification that you can read about here. There are many blog posts about using BOW in OpenCV, so I won’t go into detail; I’ll just give simple pseudocode that explains the general usage (thanks to Mathieu Barnachon from the OpenCV Q&A forum!):

Stay tuned for the next posts that will talk about binary descriptors.

Gil.

References:

[1] Csurka, Gabriella, et al. “Visual categorization with bags of keypoints.” Workshop on Statistical Learning in Computer Vision, ECCV. Vol. 1. 2004.

[2] Lowe, David G. “Object recognition from local scale-invariant features.” Proceedings of the Seventh IEEE International Conference on Computer Vision. Vol. 2. IEEE, 1999.

Hi, this looks like C++ code. Could you tell me if a bag of words model is available for Python as well?

Hi,

I’m not that familiar with Python packages, but I found this post that might be useful:

Gil.

Hi,

Thanks for the wonderful post. Once I have an object classified into a label, is it possible to draw a bounding box around that object? My frame would contain the object and other things as well, but I would like to draw a bounding box only around the object.

In my experiments, I implemented a sliding window approach. In short, I classified each window and merged the windows with scores above a threshold. Then, I drew the merged windows. If the classification was correct, those merged windows were around the detected objects.

So for each frame of a video, a sliding window has to be taken and classified using the classifier. Is that right? If I use a one-vs-all classifier, would merging all the windows that get classified as 1 give the whole object? What about false positives?

Is there a sample for the sliding window approach that I can look at? Thanks.

Yes, you should merge the windows that are marked as positive (or their score is above a threshold) and that should roughly give a bounding box around the object. False positives can always occur, as in any model or algorithm.
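A minimal sketch of the merging step is shown here. It is not the code from the experiments mentioned in this thread: it assumes a single object per frame (so one bounding box is enough), takes the union of all accepted windows, and uses a hypothetical `Window` struct and `mergePositiveWindows` function.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical window type: top-left corner, size, and classifier score.
struct Window {
    int x, y, width, height;
    float score;
};

// Merges every window whose score is at least `threshold` into one bounding
// box (the union of the accepted windows) and stores it in `merged`.
// Returns false, leaving `merged` untouched, if no window qualifies.
bool mergePositiveWindows(const std::vector<Window>& windows, float threshold,
                          Window& merged) {
    bool found = false;
    int left = 0, top = 0, right = 0, bottom = 0;
    float bestScore = 0.0f;
    for (const Window& w : windows) {
        assert(w.width > 0 && w.height > 0);
        if (w.score < threshold) continue;  // rejected by the classifier
        if (!found) {
            left = w.x;
            top = w.y;
            right = w.x + w.width;
            bottom = w.y + w.height;
            bestScore = w.score;
            found = true;
        } else {
            left = std::min(left, w.x);
            top = std::min(top, w.y);
            right = std::max(right, w.x + w.width);
            bottom = std::max(bottom, w.y + w.height);
            bestScore = std::max(bestScore, w.score);
        }
    }
    if (found) merged = Window{left, top, right - left, bottom - top, bestScore};
    return found;
}
```

Note that a single far-away false positive stretches the merged box, so raising the threshold or grouping only overlapping windows may be needed in practice.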

You can mail me at gil.levi100@gmail.com and I’ll send you an example of a sliding window approach.

Gil.

I am using Bag of Visual Words for classification. I have quantized the SIFT descriptors into 100 words for each image, encoded the histograms of the images, and completed the classification.

Now, I want to try to combine two different descriptors and detectors, i.e. SIFT and SURF, which means that neither the number of keypoints nor the descriptor dimensionality will be the same (SIFT is 128D and SURF is 64D).

What will be the easiest way to combine them?

If, for each image, I encode one histogram for SIFT (which will be a 100×1 histogram) and another for SURF (another 100×1) and then stack them together making 200×1 histogram, will that be correct?

Any other way?

Thanks a lot in advance.

You can use multiple kernel learning. Mail me at gil.levi100@gmail.com and I’ll send you some references.

Gil.

I am interested in reading about Bag of Words. Your post is a good reference.

Thank you.

Hi, I’d like to use one of your illustrations in my lecture on object detection, is that OK?

In particular I’d like to use the one with the woman, bike and violin…

Thanks!

Hannah

Of course. I actually wrote the post using Li Fei-Fei’s slides from the ICCV 2005 course about object detection:

Gil.

Great post! Definitely enjoyed the writeup.

Thanks!

Hi,

I have gone through your many tutorials – all are great. Thank you.

I have worked with bag of features mainly with CV_32F (float) type data.

Recently I have been experimenting with different binary descriptors such as ORB, BRIEF, FREAK, etc. The output of binary descriptors is of type CV_8U.

My question: is it possible to generate a visual vocabulary from binary descriptor output (CV_8U data type)? If yes, how should I proceed?

Thank you in advance.

–Neel

Hi Neel,

Thanks for your interest in my blog.

Using binary descriptors with OpenCV’s BOW is indeed problematic. There were many posts on the subject on the OpenCV Q&A forum:

http://answers.opencv.org/question/24835/is-there-a-way-of-using-orb-with-bow/

http://answers.opencv.org/question/17460/how-to-use-bag-of-words-example-with-brief-descriptors/

http://answers.opencv.org/question/25898/how-to-use-brief-orb-freak-or-integer-descriptor-with-cvbow/

Some recommend just converting the features to CV_32F. However, I don’t think this is a good solution. People tend to forget that the output of OpenCV’s binary descriptors is an array of uchars, where each uchar value packs 8 bits of the descriptor. Simply converting to CV_32F would mean that the distance measure operates on uchar values that range from 0 to 255. This has nothing to do with the real Hamming distance between the descriptors. For example, take the uchar values 128 and 0. Their L2 distance is very large (128), but their Hamming distance is only 1. Moreover, k-means with the Hamming distance is not the same as k-means with the L2 metric, which is what OpenCV’s BOW uses.

I think the proper solution is the following: take the uchar values, unpack them into their individual bits, and then cast those bits to CV_32F. Also, you don’t want to use the L2 metric. Since the L1 metric is equivalent to the Hamming metric for binary vectors, you should change it to the L1 metric. This requires some code changes:

In kmeans.cpp, change from normL2Sqr_ to normL1_ (which is defined in base.hpp).
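To make the bit-unpacking idea concrete, here is a small standalone sketch that does not touch OpenCV at all. It assumes a packed descriptor is just a vector of bytes; `hammingDistance`, `unpackBits` and `l1Distance` are hypothetical helper names used only for illustration.

```cpp
#include <bitset>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hamming distance between two packed binary descriptors (8 bits per byte).
int hammingDistance(const std::vector<std::uint8_t>& a,
                    const std::vector<std::uint8_t>& b) {
    assert(a.size() == b.size());
    int dist = 0;
    for (std::size_t i = 0; i < a.size(); ++i)
        dist += static_cast<int>(
            std::bitset<8>(static_cast<unsigned>(a[i] ^ b[i])).count());
    return dist;
}

// Unpacks every byte into 8 floats (0.0f or 1.0f), most significant bit first.
std::vector<float> unpackBits(const std::vector<std::uint8_t>& packed) {
    std::vector<float> bits;
    bits.reserve(packed.size() * 8);
    for (std::uint8_t byte : packed)
        for (int b = 7; b >= 0; --b)
            bits.push_back(static_cast<float>((byte >> b) & 1));
    return bits;
}

// L1 distance between two float vectors of equal length.
float l1Distance(const std::vector<float>& a, const std::vector<float>& b) {
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) sum += std::fabs(a[i] - b[i]);
    return sum;
}
```

For the bytes 128 and 0 from the example above, `hammingDistance` and `l1Distance` on the unpacked bits both give 1, whereas the difference between the raw byte values is 128.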

I hope that would do the trick. Please let me know if you have any other questions on the subject.

Best,

Gil.

Thanks for your nice post. Do you have any idea about changing this into video classification?

Hi,

There’s a model of space-time interest points which is similar to the bag of words model, but is used instead for video classification (for example, action recognition). See here:

http://www.di.ens.fr/~laptev/interestpoints.html

Gil.

Hi,

I am trying to implement bag of features with SIFT and k-nearest neighbors. I have 15 different scenes, 1500 training images and nearly 3000 test images. But when I run the algorithm, my accuracy is nearly 25%, while I expected at least 50% or more. How can I improve this performance? Or what is the problem? [Project description][1]

    // Fragment: assumes <opencv2/opencv.hpp> and <opencv2/xfeatures2d.hpp>,
    // "using namespace cv; using namespace std;", and that trainImg, trainLbl,
    // testImg, testLbl and kNearestNeighbor are defined elsewhere.
    int dictionarySize = 400;
    Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("FlannBased");
    Ptr<FeatureDetector> detector = xfeatures2d::SiftFeatureDetector::create();
    Ptr<DescriptorExtractor> extractor = xfeatures2d::SiftDescriptorExtractor::create();
    TermCriteria tc(CV_TERMCRIT_ITER, 10, 0.001);
    int retries = 1;
    int flags = KMEANS_PP_CENTERS;
    BOWKMeansTrainer bowTrainer(dictionarySize, tc, retries, flags);
    // Collect SIFT descriptors from every 10th training image.
    Mat unclustered;
    for (int index = 0; index < trainImg.size(); index += 10) {
        vector<KeyPoint> keypoints;
        Mat descriptor;
        Mat tmp = trainImg[index];
        detector->detect(tmp, keypoints);
        extractor->compute(tmp, keypoints, descriptor);
        unclustered.push_back(descriptor);
    }
    // Cluster the descriptors into the visual vocabulary.
    Mat vocabulary = bowTrainer.cluster(unclustered);
    BOWImgDescriptorExtractor bowDE(extractor, matcher);
    bowDE.setVocabulary(vocabulary);
    // BOW histograms and labels for the training images.
    Mat trainingData(0, dictionarySize, CV_32FC1);
    Mat labels(0, 1, CV_32FC1);
    for (int index = 0; index < trainImg.size(); index += 10) {
        vector<KeyPoint> keypoints;
        Mat descriptor;
        Mat tmp = trainImg[index];
        detector->detect(tmp, keypoints);
        bowDE.compute(tmp, keypoints, descriptor);
        trainingData.push_back(descriptor);
        labels.push_back((float)trainLbl[index]);
    }
    // BOW histograms and ground-truth labels for the test images.
    Mat testData(0, dictionarySize, CV_32FC1);
    Mat results(0, 1, CV_32FC1);
    for (int index = 0; index < testImg.size(); index += 10) {
        vector<KeyPoint> keypoints;
        Mat descriptor;
        Mat tmp = testImg[index];
        detector->detect(tmp, keypoints);
        bowDE.compute(tmp, keypoints, descriptor);
        testData.push_back(descriptor);
        results.push_back((float)testLbl[index]);
    }
    kNearestNeighbor knn(trainingData, labels, testData, results);

[1]: http://cs.brown.edu/courses/cs143/proj3/

Hi,

Can you please mail me the code to

gil.levi100@gmail.com.

Thanks,

Gil

Hi, I am not sure whether I should use all the images in the dataset to generate the BOW dictionary, or only the training images. I am getting very different results with the two approaches.

Hi,

To generate the visual vocabulary you should use only the training images. Using the test images as well leaks test data into the model, which amounts to overfitting.

Cheers,

Gil

Thank you for your great explanation.

I have a question:

Can I use a binary descriptor, for example FREAK, and apply k-means clustering?

Regards,

Esther

Yes, but the distance metric for k-means has to be the Hamming distance, not the commonly used L2.
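With the Hamming distance, the usual "mean of the members" center update no longer yields a binary vector. One common choice, shown here only as a hedged sketch, is a bitwise majority vote over the cluster members, which minimises the sum of Hamming distances to them. `BinaryDescriptor` and `majorityCenter` are hypothetical names, and the packed byte layout is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using BinaryDescriptor = std::vector<std::uint8_t>;  // packed, 8 bits per byte

// Computes a cluster center for binary descriptors by majority vote:
// each bit of the center is 1 if more than half of the members have that
// bit set, and 0 otherwise (ties resolve to 0).
BinaryDescriptor majorityCenter(const std::vector<BinaryDescriptor>& members) {
    assert(!members.empty());
    const std::size_t bytes = members[0].size();
    BinaryDescriptor center(bytes, 0);
    for (std::size_t byte = 0; byte < bytes; ++byte) {
        for (int bit = 0; bit < 8; ++bit) {
            std::size_t ones = 0;
            for (const BinaryDescriptor& m : members) {
                assert(m.size() == bytes);
                ones += (m[byte] >> bit) & 1u;  // count members with this bit set
            }
            if (2 * ones > members.size())
                center[byte] = static_cast<std::uint8_t>(center[byte] | (1u << bit));
        }
    }
    return center;
}
```

The assignment step then uses the Hamming distance between each descriptor and these binary centers instead of L2.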

Thank you very much for your post, it helped me a lot to create my Bag of Visual Words.

Now, for qualitative analysis, I need to display the visual words of the image. How did you obtain the histogram with the image of the car and the pixel area corresponding to the visual words?

Thank you in advance

Thank you for your interest in my blog. To obtain such a histogram, you should apply to your image the same feature transform you used to build the visual dictionary, and then, for each descriptor in the image, find its nearest neighbor in the dictionary. The histogram is then the number of features that correspond to each visual word in the dictionary.

Hi! Thanks a lot for your answer. I managed to obtain the histogram, but I’m not sure if I found the tiny image (the “visual word”) of the plot in the correct way. You can find my question in detail here (https://stackoverflow.com/questions/49337793/bag-of-visual-words-opencv-python-visualize-visual-word-on-image).

Thank you very much for your help.